The History of Anesthesia

Adam K. Jacob

Sandra L. Kopp

Douglas R. Bacon

Hugh M. Smith

Anesthesiologists are like no other physicians: We are experts at controlling the airway and at emergency resuscitation; we are real-time cardiopulmonologists achieving hemodynamic and respiratory stability for the anesthetized patient; we are pharmacologists and physiologists, calculating appropriate doses and desired responses; we are gurus of postoperative care and patient safety; we are internists performing perianesthetic medical evaluations; we are the pain experts across all medical disciplines and apply specialized techniques in pain clinics and labor wards; we manage the severely sick and injured in critical care units; we are neurologists, selectively blocking sympathetic, sensory, or motor functions with our regional techniques; we are trained researchers exploring scientific mystery and clinical phenomenon.

Anesthesiology is an amalgam of specialized techniques, equipment, drugs, and knowledge that, like the growth rings of a tree, have built up over time. Current anesthesia practice is the sum of centuries of individual effort and fortuitous discovery. Every component of modern anesthesia was at some point a new discovery and reflects the experience, knowledge, and inventiveness of our predecessors. Historical examination enables understanding of how these individual components of anesthesia evolved. Knowledge of the history of anesthesia enhances our appreciation of current practice and intimates where our specialty might be headed.

Anesthesia Before Ether

Physical and Psychological Anesthesia

The Edwin Smith Surgical Papyrus, the oldest known written surgical document, describes 48 cases performed by an Egyptian surgeon from 3000 to 2500 BC. While this remarkable surgical treatise contains no direct mention of measures to lessen patient pain or suffering, Egyptian pictographs from the same era show a surgeon compressing a nerve in a patient’s antecubital fossa while operating on the patient’s hand. Another image displays a patient compressing his own brachial plexus while a procedure is performed on his palm.3 In the sixteenth century, military surgeon Ambroise Paré became adept at nerve compression as a means of creating anesthesia.

Medical science has benefited from the natural refrigerating properties of ice and snow as well. For centuries anatomical dissections were performed only in winter because colder temperatures delayed deterioration of the cadaver, and in the Middle Ages the anesthetic effects of cold water and ice were recognized. In the seventeenth century, Marco Aurelio Severino described the technique of “refrigeration anesthesia” in which snow was placed in parallel lines across the incisional plane such that the surgical site became insensate within minutes. The technique never became widely used, likely because of the challenge of maintaining stores of snow year-round.4 Severino is also known to have saved numerous lives during an epidemic of diphtheria by performing tracheostomies and inserting trocars to maintain patency of the airway.5

Formal manipulation of the psyche to relieve surgical pain was undertaken by French physicians Charles Dupotet and Jules Cloquet in the late 1820s with hypnosis, then called mesmerism. Although the work of Anton Mesmer had been discredited by the French Academy of Sciences after a formal inquiry several decades earlier, proponents like Dupotet and Cloquet continued with mesmeric experiments and petitioned the Académie de Médecine to reconsider its utility.6 In a well-attended demonstration in 1828, Cloquet removed the breast of a 64-year-old patient while she reportedly remained in a calm, mesmeric sleep. This demonstration made a lasting impression on British physician John Elliotson, who became a leading figure of the mesmeric movement in England in the 1830s and 1840s. Innovative and quick to adopt new advances, Elliotson performed mesmeric demonstrations and in 1843 published Numerous Cases of Surgical Operations Without Pain in the Mesmeric State. Support for mesmerism faded when in 1846 renowned surgeon Robert Liston performed the first operation using ether anesthesia in England and remarked, “This Yankee dodge beats mesmerism all hollow”.7

Early Analgesics and Soporifics

Dioscorides, a Greek physician from the first century AD, commented on the analgesia of mandragora, a drug prepared from the bark and leaves of the mandrake plant. He observed that the plant substance could be boiled in wine, strained, and used “in the case of persons … about to be cut or cauterized, when they wish to produce anesthesia”.8 Mandragora was still being used to benefit patients as late as the seventeenth century. From the ninth to the thirteenth centuries, the soporific sponge was a dominant mode of providing pain relief during surgery. Mandrake leaves, along with black nightshade, poppies, and other herbs, were boiled together and cooked onto a sponge. The sponge was then reconstituted in hot water and placed under the patient’s nose before surgery. Prior to the hypodermic syringe and routine venous access, ingestion and inhalation were the only known routes for administering medicines to gain systemic effects. Prepared as indicated by published reports of the time, the sponge generally contained morphine and scopolamine in varying amounts—drugs used in modern anesthesia.9

Alcohol was another element of the pre-ether armamentarium because it was thought to induce stupor and blunt the impact of pain. Although alcohol is a central nervous system depressant, in the amounts administered it produced little analgesia in the setting of true surgical pain. Fanny Burney’s account underscores the ineffectiveness of alcohol as an anesthetic. Not only did alcohol provide minimal pain control, it did nothing to dull her recollection of events. Laudanum was an alcohol-based solution of opium first compounded by Paracelsus in the sixteenth century. It was wildly popular in the Victorian and Romantic periods and prescribed for a wide variety of ailments from the common cold to tuberculosis. Although appropriately used as an analgesic in some instances, it was frequently misused and abused. Laudanum was given by nursemaids to quiet wailing infants and abused by many upper-class women, poets, and artists who fell victim to its addictive potential.

Inhaled Anesthetics

Nitrous oxide was known for its ability to induce lightheadedness and was often inhaled by those seeking a thrill. It was not used for this purpose as frequently as ether because it was more difficult to synthesize and store. It was made by heating ammonium nitrate in the presence of iron filings. The evolved gas was passed through water to eliminate toxic oxides of nitrogen before being stored. Nitrous oxide was first prepared in 1773 by Joseph Priestley, an English clergyman and scientist, who ranks among the great pioneers of chemistry. Without formal scientific training, Priestley prepared and examined several gases, including nitrous oxide, ammonia, sulfur dioxide, oxygen, carbon monoxide, and carbon dioxide.

At the end of the eighteenth century in England, there was a strong interest in the supposed wholesome effects of mineral water and gases, particularly with regard to treatment of scurvy, tuberculosis, and other diseases. Thomas Beddoes opened his Pneumatic Institute close to the small spa of Hotwells, in the city of Bristol, to study the beneficial effects of inhaled gases. He hired Humphry Davy in 1798 to conduct research projects for the institute. Davy performed brilliant investigations of several gases but focused much of his attention on nitrous oxide. His human experimental results, combined with research on the physical properties of the gas, appeared in Nitrous Oxide, a 580-page book published in 1800. This impressive treatise is now best remembered for a few incidental observations. Davy commented that nitrous oxide transiently relieved a severe headache, obliterated a minor headache, and briefly quenched an aggravating toothache. The most frequently quoted passage was a casual entry: “As nitrous oxide in its extensive operation appears capable of destroying physical pain, it may probably be used with advantage during surgical operations in which no great effusion of blood takes place”.10 This is perhaps the most famous of the “missed opportunities” to discover surgical anesthesia. Davy’s lasting nitrous oxide legacy was coining the phrase “laughing gas” to describe its unique property.

Almost Discovery: Hickman, Clarke, Long, and Wells

The discovery of surgical anesthetics in the modern era remains linked to inhaled anesthetics. The compound now known as diethyl ether had been known for centuries; it may have been synthesized first by an eighth-century Arabian philosopher, Jabir ibn Hayyan, or possibly by Raymond Lully, a thirteenth-century European alchemist. But diethyl ether was certainly known in the sixteenth century to both Valerius Cordus and Paracelsus, who prepared it by distilling sulfuric acid (oil of vitriol) with fortified wine to produce an oleum vitrioli dulce (sweet oil of vitriol). In one of the first “missed” observations on the effects of inhaled agents, Paracelsus noted that ether caused chickens to fall asleep and awaken unharmed. He must have been aware of its analgesic qualities because he reported that it could be recommended for use in painful illnesses.

For three centuries thereafter, this simple compound remained a therapeutic agent with only occasional use. Some of its properties were examined but without sustained interest by distinguished British scientists Robert Boyle, Isaac Newton, and Michael Faraday, none of whom made the conceptual link to surgical anesthesia. Its only routine application came as an inexpensive recreational drug among the poor of Britain and Ireland, who sometimes drank an ounce or two of ether when taxes made gin prohibitively expensive.13 An American variation of this practice was conducted by groups of students who held ether-soaked towels to their faces at nocturnal “ether frolics.”

William E. Clarke, a medical student from Rochester, New York, may have given the first ether anesthetic in January 1842. From techniques learned as a chemistry student in 1839, Clarke entertained his companions with nitrous oxide and ether. Emboldened by these experiences, he administered ether, from a towel, to a young woman named Hobbie. One of her teeth was then extracted without pain by a dentist named Elijah Pope.14 However, it was suggested that the woman’s unconsciousness was due to hysteria and Clarke was advised to conduct no further anesthetic experiments.15

Two months later, on March 30, 1842, Crawford Williamson Long administered ether with a towel for surgical anesthesia in Jefferson, Georgia. His patient, James M. Venable, was a young man who was already familiar with ether’s exhilarating effects, for he reported in a certificate that he had previously inhaled it and was fond of its use. Venable had two small tumors on his neck but refused to have them excised because he feared the pain that accompanied surgery. Knowing that Venable was familiar with ether’s action, Dr. Long proposed that ether might alleviate pain and gained his patient’s consent to proceed. After inhaling ether from the towel and having the procedure successfully completed, Venable reported that he was unaware of the removal of the tumors.16 In determining the first fee for anesthesia and surgery, Long settled on a charge of $2.00.17

A common mid-nineteenth-century problem facing dentists was that patients refused beneficial treatment of their teeth for fear of the pain of the procedure. From a dentist’s perspective, pain was not so much life-threatening as it was livelihood-threatening. One of the first dentists to engender a solution was Horace Wells of Hartford, Connecticut, whose great moment of discovery came on December 10, 1844. He observed a lecture-exhibition on nitrous oxide by an itinerant “scientist,” Gardner Quincy Colton, who encouraged members of the audience to inhale a sample of the gas. Wells observed a young man injure his leg without pain while under the influence of nitrous oxide. Sensing that it might provide pain relief during dental procedures, Wells contacted Colton and boldly proposed an experiment in which Wells was to be the subject. The following day, Colton gave Wells nitrous oxide before a fellow dentist, John Riggs, extracted a tooth.18 Afterward Wells declared that he had not felt any pain and deemed the experiment a success. Colton taught Wells to prepare nitrous oxide, which the dentist administered with success to patients in his practice. His apparatus probably resembled that used by Colton: a wooden tube placed in the mouth through which nitrous oxide was breathed from a small bag filled with the gas.

Public Demonstration of Ether Anesthesia

Another New Englander, William Thomas Green Morton, briefly shared a dental practice with Wells in Hartford. Wells’ daybook shows that he gave Morton a course of instruction in anesthesia, but Morton apparently moved to Boston without paying for the lessons.19 In Boston, Morton continued his interest in anesthesia and sought instruction from chemist and physician Charles T. Jackson. After learning that ether dropped on the skin provided analgesia, he began experiments with inhaled ether, an agent that proved to be much more versatile than nitrous oxide. Bottles of liquid ether were easily transported, and the volatility of the drug permitted effective inhalation. The concentrations required for surgical anesthesia were so low that patients did not become hypoxic when breathing ether vaporized in air. It also possessed what would later be recognized as a unique property among all inhaled anesthetics: The quality of providing surgical anesthesia without causing respiratory depression. These properties, combined with a slow rate of induction, gave the patient a significant safety margin even in the hands of relatively unskilled anesthetists.20

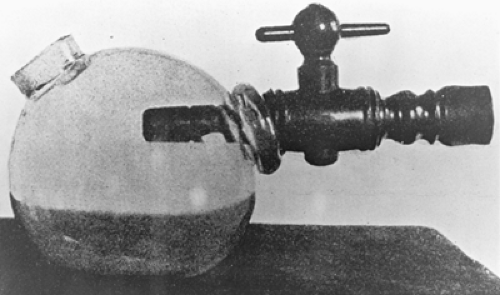

After anesthetizing a pet dog, Morton became confident of his skills and anesthetized patients with ether in his dental office. Encouraged by his success, Morton sought an invitation to give a public demonstration in the Bulfinch amphitheater of the Massachusetts General Hospital, the same site as Wells’ failed demonstration of the efficacy of nitrous oxide as a complete surgical anesthetic. Many details of the October 16, 1846, demonstration are well known. Morton secured permission to provide an anesthetic to Edward Gilbert Abbott, a patient of surgeon John Collins Warren. Warren planned to excise a vascular lesion from the left side of Abbott’s neck and was about to proceed when Morton arrived late. He had been delayed because he was obliged to wait for an instrument maker to complete a new inhaler (Fig. 1-1). It consisted of a large glass bulb containing a sponge soaked with oil of orange mixed with ether and a spout that was placed in the patient’s mouth. An opening on the opposite side of the bulb allowed air to enter and be drawn over the ether-soaked sponge with each breath.21

When the details of Morton’s anesthetic technique became public knowledge, the information was transmitted by train, stagecoach, and coastal vessels to other North American cities and by ship to the world. As ether was easy to prepare and administer, anesthetics were performed in Britain, France, Russia, South Africa, Australia, and other countries almost as soon as surgeons heard the welcome news of the extraordinary discovery. Even though surgery could now be performed with “pain put to sleep,” the frequency of operations did not rise rapidly, and several years would pass before anesthesia was universally recommended.

Chloroform and Obstetrics

James Young Simpson was a successful obstetrician of Edinburgh, Scotland, and among the first to use ether for the relief of labor pain. Dissatisfied with ether, Simpson soon sought a more pleasant, rapid-acting anesthetic. He and his junior associates conducted a bold search by inhaling samples of several volatile chemicals collected for Simpson by British apothecaries. David Waldie suggested chloroform, which had first been prepared in 1831. Simpson and his friends inhaled it after dinner at a party in Simpson’s home on the evening of November 4, 1847. They promptly fell unconscious and, when they awoke, were delighted with their success. Simpson quickly set about encouraging the use of chloroform. Within 2 weeks, he submitted his first account of its use for publication in The Lancet.

In the nineteenth century, the relief of obstetric pain had significant social ramifications and made anesthesia during childbirth a controversial subject. Simpson argued against the prevailing view, which held that relieving labor pain opposed God’s will. The pain of the parturient was viewed as both a component of punishment and a means of atonement for Original Sin. Less than a year after administering the first anesthesia during childbirth, Simpson addressed these concerns in a pamphlet entitled Answer to the Religious Objections Advanced Against the Employment of Anaesthetic Agents in Midwifery and Surgery. In it, Simpson recognized the Book of Genesis as being the root of this sentiment and noted that God promised to relieve the descendants of Adam and Eve of the curse. In addition, Simpson asserted that labor pain was a result of scientific and anatomic causes, and not the result of religious condemnation. He stated that the upright position of humans necessitated strong pelvic muscles to support the abdominal contents. As a result, he argued that the uterus necessarily developed strong musculature to overcome the resistance of the pelvic floor and that great contractile power caused great pain. Simpson’s pamphlet probably did not have a significant impact on the prevailing attitudes, but he did articulate many concepts that his contemporaries were debating at the time.23

Chloroform gained considerable notoriety after John Snow used it to deliver the last two children of Queen Victoria. The Queen’s consort, Prince Albert, interviewed John Snow before he was called to Buckingham Palace to administer chloroform at the request of the Queen’s obstetrician. During the monarch’s labor, Snow gave analgesic doses of chloroform on a folded handkerchief. This technique was soon termed chloroform à la reine. Victoria abhorred the pain of childbirth and enjoyed the relief that chloroform provided. She wrote in her journal, “Dr. Snow gave that blessed chloroform and the effect was soothing, quieting, and delightful beyond measure”.24 When the Queen, as head of the Church of England, endorsed obstetric anesthesia, religious debate over the management of labor pain terminated abruptly.

John Snow, already a respected physician, took an interest in anesthetic practice and was soon invited to work with many leading surgeons of the day. In 1848, Snow introduced a chloroform inhaler. He had recognized the versatility of the new agent and came to prefer it in his practice. At the same time, he initiated what was to become an extraordinary series of experiments that were remarkable in their scope and for anticipating sophisticated research performed a century later. Snow realized that successful anesthetics should abolish pain and unwanted movements. He anesthetized several species of animals with varying strengths of ether and chloroform to determine the concentration required to prevent reflex movement from sharp stimuli. This work approximated the modern concept of minimum alveolar concentration.25 Snow assessed the anesthetic action of a large number of potential anesthetics but did not find any to rival chloroform or ether. His studies led him to recognize the relationship between solubility, vapor pressure, and anesthetic potency, which was not fully appreciated until after World War II. Snow published two remarkable books, On the Inhalation of the Vapour of Ether (1847) and On Chloroform and Other Anaesthetics (1858). The latter was almost completed when he died of a stroke at the age of 45 and was published posthumously.

Anesthesia Principles, Equipment, and Standards

Control of the Airway

Definitive control of the airway, a skill anesthesiologists now consider paramount, developed only after many harrowing and apneic episodes spurred the development of safer airway management techniques. Preceding tracheal intubation, however, several important techniques were proposed toward the end of the nineteenth century that remain integral to anesthesiology education and practice. Joseph Clover was the first Englishman to urge the now universal practice of thrusting the patient’s jaw forward to overcome obstruction of the upper airway by the tongue. Clover also published a landmark case report in 1877 in which he performed a surgical airway. Once his patient was asleep, Clover discovered that his patient had a tumor of the mouth that obstructed the airway completely, despite his trusted jaw-thrust maneuver. He averted disaster by inserting a small curved cannula of his design through the cricothyroid membrane. He continued anesthesia via the cannula until the tumor was excised. Clover, the model of the prepared anesthesiologist, remarked, “I have never used the cannula before although it has been my companion at some thousands of anaesthetic cases”.26

Tracheal Intubation

The development of techniques and instruments for tracheal intubation ranks among the major advances in the history of anesthesiology. The first tracheal tubes were developed for the resuscitation of drowning victims, but were not used in anesthesia until 1878. The first use of elective oral intubation for an anesthetic was undertaken by Scottish surgeon William Macewen. He had practiced passing flexible metal tubes through the larynx of a cadaver before attempting the maneuver on an awake patient with an oral tumor at the Glasgow Royal Infirmary on July 5, 1878.27 Since topical anesthesia was not yet known, the experience must have demanded fortitude on the part of Macewen’s patient. Once the tube was correctly positioned, an assistant began a chloroform–air anesthetic via the tube. Once anesthetized, the patient soon stopped coughing. Unfortunately, Macewen abandoned the practice following a fatality in which a patient had been successfully intubated while awake but the tube became dislodged once the patient was asleep. After the tube was removed, an attempt to provide chloroform by mask anesthesia was unsuccessful and the patient died.

An American surgeon named Joseph O’Dwyer is remembered for his extraordinary dedication to the advancement of tracheal intubation. In 1885, O’Dwyer designed a series of metal laryngeal tubes, which he inserted blindly between the vocal cords of children suffering a diphtheritic crisis. Three years later, O’Dwyer designed a second rigid tube with a conical tip that occluded the larynx so effectively that it could be used for artificial ventilation when applied with the bellows and T-piece tube designed by George Fell. The Fell–O’Dwyer apparatus, as it came to be known, was used during thoracic surgery by Rudolph Matas of New Orleans. Matas was so pleased with it that he predicted, “The procedure that promises the most benefit in preventing pulmonary collapse in operations on the chest is … the rhythmical maintenance of artificial respiration by a tube in the glottis directly connected with a bellows.”22

After O’Dwyer’s death, the outstanding pioneer of tracheal intubation was Franz Kuhn, a surgeon of Kassel, Germany. From 1900 until 1912, Kuhn published several articles and a classic monograph, “Die perorale Intubation,” which were not well known in his lifetime but have since become widely appreciated.25 His work might have had a more profound impact if it had been translated into English. Kuhn described techniques of oral and nasal intubation that he performed with flexible metal tubes composed of coiled tubing similar to those now used for the spout of metal gasoline cans. After applying cocaine to the airway, Kuhn introduced his tube over a curved metal stylet that he directed toward the larynx with his left index finger. While he was aware of the subglottic cuffs that had been used briefly by Victor Eisenmenger, Kuhn preferred to seal the larynx by positioning a supralaryngeal flange near the tube’s tip before packing the pharynx with gauze. Kuhn even monitored the patient’s breath sounds continuously through a monaural earpiece connected to an extension of the tracheal tube by a narrow tube.

Intubation of the trachea by palpation was an uncertain and sometimes traumatic act; surgeons even believed that it would be anatomically impossible to visualize the vocal cords directly. This misapprehension was overcome in 1895 by Alfred Kirstein in Berlin who devised the first direct-vision laryngoscope.28 Kirstein was motivated by a friend’s report that a patient’s trachea had been accidentally intubated during esophagoscopy. Kirstein promptly fabricated a handheld instrument that at first resembled a shortened cylindrical esophagoscope. He soon substituted a semicircular blade that opened inferiorly. Kirstein could now examine the larynx while standing behind his seated patient, whose head had been placed in an attitude approximating the currently termed “sniffing position.” Although Alfred Kirstein’s “autoscope” was not used by anesthesiologists, it was the forerunner of all modern laryngoscopes. Endoscopy was refined by Chevalier Jackson in Philadelphia, who designed a U-shaped laryngoscope by adding a handgrip that was parallel to the blade. The Jackson blade has remained a standard instrument for endoscopists but was not favored by anesthesiologists. Two laryngoscopes that closely resembled modern L-shaped instruments were designed in 1910 and 1913 by two American surgeons, Henry Janeway and George Dorrance, but neither instrument achieved lasting use despite their excellent designs.29

Before the introduction of muscle relaxants in the 1940s, intubation of the trachea could be challenging. This challenge was made somewhat easier, however, with the advent of laryngoscope blades specifically designed to increase visualization of the vocal cords. Robert Miller of San Antonio, Texas, and Robert Macintosh of Oxford University created their respectively named blades within an interval of 2 years. In 1941, Miller brought forward the slender, straight blade with a slight curve near the tip to ease the passage of the tube through the larynx. Although Miller’s blade was a refinement, the technique of its use was identical to that of earlier models as the epiglottis was lifted to expose the larynx.30

The Macintosh blade, which is placed in the vallecula rather than under the epiglottis, was invented as an incidental result of a tonsillectomy. Sir Robert Macintosh later described the circumstances of its discovery in an appreciation of the career of his technician, Mr. Richard Salt, who constructed the blade. As Sir Robert recalled, “A Boyle-Davis gag, a size larger than intended, was inserted for tonsillectomy, and when the mouth was fully opened the cords came into view. This was a surprise since conventional laryngoscopy, at that depth of anaesthesia, would have been impossible in those pre-relaxant days. Within a matter of hours, Salt had modified the blade of the Davis gag and attached a laryngoscope handle to it; and streamlined (after testing several models), the end result came into widespread use”.31 Macintosh underestimated the popularity of the blade, as more than 800,000 have been produced and many special-purpose versions have been marketed.

The most distinguished innovator in tracheal intubation was the self-trained British anesthetist Ivan (later, Sir Ivan) Magill.32 In 1919, while serving in the Royal Army Medical Corps as a general medical officer, Magill was assigned to a military hospital near London. Although he had only rudimentary training in anesthesia, Magill was obliged to accept an assignment to the anesthesia service, where he worked with another neophyte, Stanley Rowbotham.33 Together, Magill and Rowbotham attended casualties disfigured by severe facial injuries who underwent repeated restorative operations. These procedures required that the surgeon, Harold Gillies, have unrestricted access to the face and airway. These patients presented formidable challenges, but both Magill and Rowbotham became adept at tracheal intubation and quickly understood its limitations at the time. Because they learned from fortuitous observations, they soon extended the scope of tracheal anesthesia.

They gained expertise with blind nasal intubation after they learned to soften semirigid insufflation tubes for passage through the nostril. Even though their original intent was to position the tips of the nasal tubes in the posterior pharynx, the slender tubes frequently ended up in the trachea. Stimulated by this chance experience, they developed techniques of deliberate nasotracheal intubation. In 1920, Magill devised an aid to manipulating the catheter tip, the “Magill angulated forceps,” which continues to be manufactured according to the original design he produced over 90 years ago.

With the war over, Magill entered civilian practice and set out to develop a wide-bore tube that would resist kinking but be conformable to the contours of the upper airway. While in a hardware store, he found mineralized red rubber tubing that he cut, beveled, and smoothed to produce tubes that clinicians around the world would come to call “Magill tubes.” His tubes remained the universal standard for more than 40 years until rubber products were supplanted by inert plastics. Magill also rediscovered the advantage of applying cocaine to the nasal mucosa, a technique that greatly facilitated awake blind nasal intubation.

In 1926, Arthur Guedel began a series of experiments that led to the introduction of the cuffed tube. Guedel transformed the basement of his Indianapolis home into a laboratory where he subjected each step of the preparation and application of his cuffs to a rigorous review.34 He fashioned cuffs from the rubber of dental dams, condoms, and surgical gloves that were glued onto the outer wall of tubes. Using animal tracheas donated by the family butcher as his model, he considered whether the cuff should be positioned above, below, or at the level of the vocal cords. He recommended that the cuff be positioned just below the vocal cords to seal the airway. Ralph Waters later recommended that cuffs be constructed of two layers of soft rubber cemented together. These detachable cuffs were first manufactured by Waters’ children, who sold them to the Foregger Company.

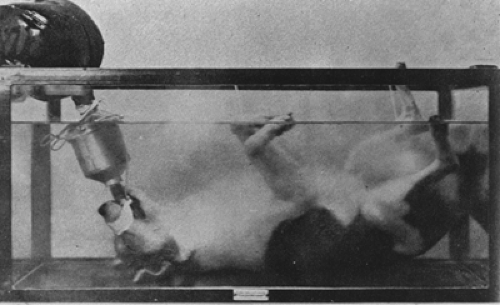

Guedel sought ways to show the safety and utility of the cuffed tube. He first filled the mouth of an anesthetized and intubated patient with water and showed that the cuff sealed the airway. Even though this exhibition was successful, he searched for a more dramatic technique to capture the attention of those unfamiliar with the advantages of intubation. He reasoned that if the cuff prevented water from entering the trachea of an intubated patient, it should also prevent an animal from drowning, even if it were submerged under water. To encourage physicians attending a medical convention to use his tracheal techniques, Guedel prepared the first of several “dunked dog” demonstrations (Fig. 1-2). An anesthetized and intubated dog, Guedel’s own pet, “Airway,” was immersed in an aquarium. After the demonstration was completed, the anesthetic was discontinued before the animal was removed from the water. According to legend, Airway awoke promptly, shook water over the onlookers, saluted a post, then trotted from the hall to the applause of the audience.

After a patient experienced an accidental endobronchial intubation, Ralph Waters reasoned that a very long cuffed tube could be used to isolate the lungs. The dependent lung could be ventilated while the upper lung was being resected.35 On learning of his friend’s success with intentional one-lung anesthesia, Arthur Guedel proposed an important modification for chest surgery, the double-cuffed single-lumen tube, which was introduced by Emery Rovenstine. These tubes were easily positioned, an advantage over bronchial blockers that had to be inserted by a skilled bronchoscopist. In 1953, single-lumen tubes were supplanted by double-lumen endobronchial tubes. The double-lumen tube currently most popular was designed by Frank Robertshaw of Manchester, England, and is prepared in both right- and left-sided versions. Robertshaw tubes were first manufactured from mineralized red rubber but are now made of extruded plastic, a technique refined by David Sheridan. Sheridan was also the first person to embed centimeter markings along the side of tracheal tubes, a safety feature that reduced the risk of the tube being incorrectly positioned.

Advanced Airway Devices

Conventional laryngoscopes proved inadequate for patients with “difficult airways.” A few clinicians credit harrowing intubating experiences as the incentive for invention. In 1928, a rigid bronchoscope was specifically designed for examination of the large airways. Rigid bronchoscopes were refined and used by pulmonologists. Although it was known in 1870 that a thread of glass could transmit light along its length, technologic limitations were not overcome until 1964 when Shigeto Ikeda developed the first flexible fiberoptic bronchoscope. Fiberoptic-assisted tracheal intubation has become a common approach in the management of patients with difficult airways having surgery.

Roger Bullard desired a device to simultaneously examine the larynx and intubate the trachea. He had been frustrated by failed attempts to visualize the larynx of a patient with Pierre Robin syndrome. In response, he developed the Bullard laryngoscope, whose fiberoptic bundles lie beside a curved blade. Similarly, the Wu-scope was designed by Tzu-Lang Wu in 1994 to combine and facilitate visualization and intubation of the trachea in patients with difficult airways.36

Dr. A. I. J. “Archie” Brain first recognized the principle of the laryngeal mask airway (LMA) in 1981 when, like many British clinicians, he provided dental anesthesia via a Goldman nasal mask. However, unlike any before him, he realized that just as the dental mask could be fitted closely about the nose, a comparable mask attached to a wide-bore tube might be positioned around the larynx. He not only conceived of this radical departure in airway management, which he first described in 1983,37 but also spent years single-handedly fabricating and testing several incremental modifications. Scores of Brain’s prototypes are displayed in the Royal Berkshire Hospital, Reading, England, where they provide a detailed record of the evolution of the LMA. He fabricated his first models from Magill tubes and Goldman masks, then refined their shape by performing postmortem studies of the hypopharynx to determine the form of cuff that would be most functional. Before silicone rubber was selected, Brain had even mastered the technique of forming masks from liquid latex. Every detail of the LMA, from the number and position of the aperture bars to the shape and size of the masks, required repeated modification.

Early Anesthesia Delivery Systems

the generous observation, “There is no restriction respecting the making of it”.39

Joseph Clover, another British physician, was the first anesthetist to administer chloroform in known concentrations through the “Clover bag.” He obtained a 4.5% concentration of chloroform in air by pumping a measured volume of air with a bellows through a warmed evaporating vessel containing a known volume of liquid chloroform.40 Although it was realized that nitrous oxide diluted in air often gave a hypoxic mixture and that the oxygen–nitrous oxide mixture was safer, Chicago surgeon Edmund Andrews complained about the physical limitations of delivering anesthesia to patients in their homes. The large bag was conspicuous and awkward to carry along busy streets. He observed that “In city practice, among the higher classes, however, this is no obstacle as the bag can always be taken in a carriage, without attracting attention”.41 In 1872, Andrews was delighted to report the availability of liquefied nitrous oxide compressed under 750 pounds of pressure, which allowed a supply sufficient for three patients to be carried in a single cylinder.

Critical to increasing patient safety was the development of a machine capable of delivering a calibrated amount of gas and volatile anesthetic. In the late nineteenth century, demands in dentistry instigated development of the first freestanding anesthesia machines. Three American dentist-entrepreneurs, Samuel S. White, Charles Teter, and Jay Heidbrink, developed the original series of US instruments that used compressed cylinders of nitrous oxide and oxygen. Before 1900, the S. S. White Company modified Frederick Hewitt’s apparatus and marketed its continuous-flow machine, which was refined by Teter in 1903. Heidbrink added reducing valves in 1912. In the same year, physicians initiated other important developments. Water–bubble flowmeters, introduced by Frederick Cotton and Walter Boothby of Harvard University, allowed the proportion of gases and their flow rate to be approximated. The Cotton and Boothby apparatus was transformed into a practical portable machine by James Tayloe Gwathmey of New York. The Gwathmey machine caught the attention of a London anesthetist, Henry E. G. “Cockie” Boyle, who acknowledged his debt to the American when he incorporated Gwathmey’s concepts in the first of the series of “Boyle” machines that were marketed by Coxeter and British Oxygen Corporation. During the same period in Lübeck, Germany, Heinrich Draeger and his son, Bernhard, adapted compressed gas technology, which they had originally developed for mine rescue equipment, to manufacture ether and chloroform–oxygen machines.

In the years after World War I, several US manufacturers continued to bring forward widely admired anesthesia machines. Richard von Foregger was an engineer who was exceptionally receptive to clinicians’ suggestions for additional features for his machines. Elmer McKesson became one of the country’s first specialists in anesthesiology in 1910 and developed a series of gas machines. In an era of flammable anesthetics, McKesson carried nitrous oxide anesthesia to its therapeutic limit by performing inductions with 100% nitrous oxide and thereafter adding small volumes of oxygen. If the resultant cyanosis became too profound, McKesson depressed a valve on his machine that flushed a small volume of oxygen into the circuit. Even though his techniques of primary and secondary saturation with nitrous oxide are no longer used, the oxygen flush valve is part of McKesson’s legacy.

Alternative Circuits

A valveless device, the Ayre’s T-piece, has found wide application in the management of intubated patients. Phillip Ayre practiced anesthesia in England when the limitations of equipment for pediatric patients produced what he described as “a protracted and sanguine battle between surgeon and anaesthetist, with the poor unfortunate baby as the battlefield”.42 In 1937, Ayre introduced his valveless T-piece to reduce the effort of breathing in neurosurgical patients. The T-piece soon became particularly popular for cleft palate repairs, as the surgeon had free access to the mouth. Positive-pressure ventilation could be achieved when the anesthetist obstructed the expiratory limb. In time, this ingenious, lightweight, non-rebreathing device evolved through more than 100 modifications for a variety of special situations. A significant alteration was Gordon Jackson Rees’ circuit, which permitted improved control of ventilation by substituting a breathing bag on the outflow limb.43 An alternative method to reduce the amount of equipment near the patient is provided by the coaxial circuit of the Bain–Spoerel apparatus.44 This lightweight tube-within-a-tube has served very well in many circumstances since its Canadian innovators described it in 1972.

Ventilators

Mechanical ventilators are now an integral part of the anesthesia machine. Patients are ventilated during general anesthesia by electrical or gas-powered devices that are simple to control yet sophisticated in their function. The history of mechanical positive-pressure ventilation began with attempts to resuscitate victims of drowning by a bellows attached to a mask or tracheal tube. These experiments found little role in anesthetic care for many years. At the beginning of the twentieth century, however, several modalities were explored before intermittent positive-pressure machines evolved.

A series of artificial environments were created in response to the frustration experienced by thoracic surgeons who found that the lung collapsed when they incised the pleura. Between 1900 and 1910, continuous positive- or negative-pressure devices were created to maintain inflation of the lungs of a spontaneously breathing patient once the chest was opened. Brauer (1904) and Murphy (1905) placed the patient’s head and neck in a box in which positive pressure was continually maintained. Sauerbruch (1904) created a negative-pressure operating chamber encompassing both the surgical team and the patient’s body and from which only the patient’s head projected.45

In 1907, the first intermittent positive-pressure device, the Draeger “Pulmotor,” was developed to rhythmically inflate the lungs. This instrument and later American models such as the E & J Resuscitator were used almost exclusively by firefighters and mine rescue workers. In 1934, a Swedish team developed the “Spiropulsator,” which C. Crafoord later modified for use during cyclopropane anesthesia.46 Its action was controlled by a magnetic control valve called the flasher, a type first used to provide intermittent gas flow for the lights of navigational buoys. When Trier Morch, a Danish anesthesiologist, could not obtain a Spiropulsator during World War II, he fabricated the Morch “Respirator,” which used a piston pump to rhythmically deliver a fixed volume of gas to the patient.45

A major stimulus to the development of ventilators came as a consequence of a devastating epidemic of poliomyelitis that struck Copenhagen, Denmark, in 1952. As scores of patients were admitted, the only effective ventilatory support that could be provided to patients with bulbar paralysis was continuous manual ventilation via a tracheostomy employing devices such as Waters’ “to-and-fro” circuit. This succeeded only through the dedicated efforts of hundreds of volunteers. Medical students served in relays to ventilate paralyzed patients. The Copenhagen crisis stimulated a broad European interest in the development of portable ventilators in anticipation of another epidemic of poliomyelitis. At this time, the common practice in North American hospitals was to place polio patients with respiratory involvement in “iron lungs,” metal cylinders that encased the body below the neck. Inspiration was caused by intermittent negative pressure created by an electric motor acting on a piston-like device occupying the foot of the chamber.

Some early American ventilators were adaptations of respiratory-assist machines originally designed for the delivery of aerosolized drugs for respiratory therapy. Two types employed the Bennett or Bird “flow-sensitive” valves. The Bennett valve was designed during World War II when a team of physiologists at the University of Southern California encountered difficulties in separating inspiration from expiration in an experimental apparatus designed to provide positive-pressure breathing for aviators at high altitude. An engineer, Ray Bennett, visited their laboratory, observed their problem, and resolved it with a mechanical flow-sensitive automatic valve. A second valving mechanism was later designed by an aeronautical engineer, Forrest Bird.

The use of the Bird and Bennett valves gained anesthetic application when the gas flow from the valve was directed into a rigid plastic jar containing a breathing bag or bellows as part of an anesthesia circuit. These “bag-in-bottle” devices mimicked the action of the clinician’s hand as the gas flow compressed the bag, thereby providing positive-pressure inspiration. Passive exhalation was promoted by the descent of a weight on the bag or bellows.

Carbon Dioxide Absorption

Carbon dioxide (CO2) absorption is a basic element of modern anesthetic machines. It was initially developed to allow rebreathing of gas and minimize loss of flammable gases into the room, thereby reducing the risk of explosion. In current practice, it permits decreased utilization of oxygen and anesthetic, thus reducing cost. The first CO2 absorber in anesthesia came in 1906 from the work of Franz Kuhn, a German surgeon. His use of canisters developed for mine rescues by Draeger was innovative, but his circuit had unfortunate limitations. Its exceptionally narrow breathing tubes and large dead space explain its very limited use, and Kuhn’s device was ignored.

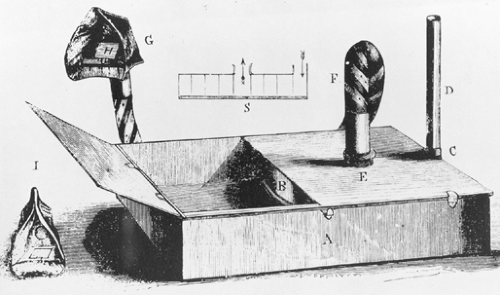

A few years later, the first American machine with a CO2 absorber was independently fabricated by a pharmacologist named Dennis Jackson. In 1915, Jackson developed an early technique of CO2 absorption that permitted the use of a closed anesthesia circuit. He used solutions of sodium and calcium hydroxide to absorb CO2. As his laboratory was located in an area of St. Louis, Missouri, heavily laden with coal smoke, Jackson reported that the apparatus allowed him the first breaths of absolutely fresh air he had ever enjoyed in that city. The complexity of Jackson’s apparatus limited its use in hospital practice, but his pioneering work in this field encouraged Ralph Waters to introduce a simpler device using soda lime granules 9 years later. Waters positioned a soda lime canister (Fig. 1-4) between a face mask and an adjacent breathing bag to which was attached the fresh gas flow. As long as the mask was held against the face, only small volumes of fresh gas flow were required and no valves were needed.47

Waters’ device featured awkward positioning of the canister close to the patient’s face. Brian Sword overcame this limitation in 1930 with a freestanding machine with unidirectional valves to create a circle system and an in-line CO2 absorber.48 James Elam and his coworkers at the Roswell Park Cancer Institute in Buffalo, New York, further refined the CO2 absorber, increasing the efficiency of CO2 removal with a minimum of resistance for breathing.49 Consequently, the circle system introduced by Sword in the 1930s, with a few refinements, became the standard anesthesia circuit in North America.

Flowmeters

As closed and semiclosed circuits became practical, gas flow could be measured with greater accuracy. Bubble flowmeters were replaced with dry bobbins or ball-bearing flowmeters, which, although they did not leak fluids, could cause inaccurate measurements if they adhered to the glass column. In 1910, M. Neu had been the first to apply rotameters in anesthesia for the administration of nitrous oxide and oxygen, but his machine was not a commercial success, perhaps because of the great cost of nitrous oxide in Germany at that time. Rotameters designed for use in German industry were first employed in Britain in 1937 by Richard Salt; but as World War II approached, the English were denied access to these sophisticated flowmeters. After World War II rotameters became regularly employed in British anesthesia machines, although most American equipment still featured nonrotating floats. The now universal practice of displaying gas flow in liters per minute was not a customary part of all American machines until more than a decade after World War II.

Vaporizers

The art of a smooth induction with a potent anesthetic was a great challenge, particularly if the inspired concentration could not be determined with accuracy. Even the clinical introduction of halothane after 1956 might have been thwarted except for a fortunate coincidence: the prior development of calibrated vaporizers. Two types of calibrated vaporizers designed for other anesthetics had become available in the half decade before halothane was marketed. The prompt acceptance of halothane was in part because of an ability to provide it in carefully titrated concentrations.

The Copper Kettle was the first temperature-compensated, accurate vaporizer. It had been developed by Lucien Morris at the University of Wisconsin in response to Ralph Waters’ plan to test chloroform by giving it in controlled concentrations.50 Morris achieved this goal by passing a metered flow of oxygen through a vaporizer chamber that contained a sintered bronze disk to separate the oxygen into minute bubbles. The gas became fully saturated with anesthetic vapor as it percolated through the liquid. The concentration of the anesthetic inspired by the patient could be calculated by knowing the vapor pressure of the liquid anesthetic, the volume of oxygen flowing through the liquid, and the total volume of gases from all sources entering the anesthesia circuit. Although experimental models of Morris’ vaporizer used a water bath to maintain stability, the excellent thermal conductivity of copper, especially when the device was attached to a metal anesthetic machine, was substituted in later models. When first marketed, the Copper Kettle did not feature a mechanism to indicate changes in the temperature (and vapor pressure) of the liquid. Shuh-Hsun Ngai proposed the incorporation of a thermometer, a suggestion that was later added to all vaporizers of that class.51 The Copper Kettle (Foregger Company) and the Vernitrol (Ohio Medical Products) were universal vaporizers that could be charged with any anesthetic liquid, and, provided that its vapor pressure and temperature were known, the inspired concentration could be calculated quickly.
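The calculation described above can be sketched in a few lines. This is an illustrative reconstruction, not a formula quoted from Morris: it assumes the carrier oxygen leaves the chamber fully saturated, so that vapor makes up the fraction SVP / P_bar of the outflow. The function names, the 760 mmHg barometric pressure, and the halothane vapor pressure of roughly 243 mmHg at 20°C are assumptions chosen for the example.

```python
"""Illustrative Copper Kettle arithmetic (a sketch, not a clinical tool)."""


def vapor_flow_ml_min(carrier_ml_min, vapor_pressure_mmhg, barometric_mmhg=760.0):
    """Vapor (mL/min) picked up by carrier gas that leaves fully saturated.

    In the saturated outflow, vapor occupies the fraction SVP / P_bar, which
    rearranges to: vapor = carrier * SVP / (P_bar - SVP).
    """
    if carrier_ml_min < 0:
        raise ValueError("carrier flow must be non-negative")
    if not 0 <= vapor_pressure_mmhg < barometric_mmhg:
        raise ValueError("vapor pressure must be below barometric pressure")
    return carrier_ml_min * vapor_pressure_mmhg / (barometric_mmhg - vapor_pressure_mmhg)


def inspired_fraction(carrier_ml_min, diluent_ml_min, vapor_pressure_mmhg,
                      barometric_mmhg=760.0):
    """Fraction of anesthetic vapor in the total gas entering the circuit."""
    vapor = vapor_flow_ml_min(carrier_ml_min, vapor_pressure_mmhg, barometric_mmhg)
    # Total gas from all sources: kettle oxygen, the vapor it carries, and
    # the separately metered diluent gases.
    total = carrier_ml_min + diluent_ml_min + vapor
    if total == 0:
        raise ValueError("total gas flow must be positive")
    return vapor / total


if __name__ == "__main__":
    # Assumed example: 100 mL/min of oxygen through the kettle, 5 L/min of
    # diluent gas, halothane vapor pressure taken as 243 mmHg.
    fraction = inspired_fraction(100, 5000, 243)
    print(f"Inspired concentration: {fraction * 100:.2f}%")
```

With these assumed values the result is a little under 1%, which shows why the liquid temperature, and hence the vapor pressure, had to be known: the same kettle flow delivers a different concentration as the liquid cools.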

When halothane was first marketed in Britain, an effective temperature-compensated, agent-specific vaporizer had recently been placed in clinical use. The TECOTA (TEmperature COmpensated Trichloroethylene Air) vaporizer featured a bimetallic strip composed of brass and a nickel–steel alloy, two metals with different coefficients of expansion. As the anesthetic vapor cooled, the strip bent to move away from the orifice, thereby permitting more fresh gas to enter the vaporizing chamber. This maintained a constant inspired concentration despite changes in temperature and vapor pressure. After the TECOTA vaporizer was accepted into anesthetic practice, the technology was used to create the “Fluotec,” the first of a series of agent-specific “tec” vaporizers for use in the operating room.

Patient Monitors

In many ways, the history of late-nineteenth and early-twentieth century anesthesiology is the quest for the safest anesthetic. The discovery and widespread use of electrocardiography, pulse oximetry, blood gas analysis, capnography, and neuromuscular blockade monitoring have reduced patient morbidity and mortality and revolutionized anesthesia practice. While safer machines assured clinicians that appropriate gas mixtures were delivered to the patient, monitors provided an early warning of acute physiologic deterioration before patients suffered irrevocable damage.

Joseph Clover was one of the first clinicians to routinely perform basic hemodynamic monitoring. Clover developed the habit of monitoring his patients’ pulse, but, surprisingly, this was a contentious issue at the time. Prominent Scottish surgeons scorned Clover’s emphasis on the action of chloroform on the heart. Baron Lister and others preferred that senior medical students give anesthetics and urged them to “strictly carry out certain simple instructions, among which is that of never touching the pulse, in order that their attention may not be distracted from the respiration”.52 Lister also counseled, “it appears that preliminary examination of the chest, often considered indispensable, is quite unnecessary, and more likely to induce the dreaded syncope, by alarming the patients, than to avert it”.53 Little progress in anesthesia could come from such reactionary statements. In contrast, Clover had observed the effect of chloroform on animals and urged other anesthetists to monitor the pulse at all times and to discontinue the anesthetic temporarily if any irregularity or weakness was observed in the strength of the pulse.
