208 Quality and Patient Safety in Emergency Medicine

• The great variability in local and regional clinical practice patterns indicates that the U.S. population does not consistently receive high-quality health care. Problems with overuse, underuse, and misuse of health care resources have been documented.

• Two watershed Institute of Medicine reports brought the quality problems in health care to center stage. These reports championed the principle that health care should be safe, effective, patient-centered, timely, efficient, and equitable.

• The greatest sources of quality problems in health care are not “bad apples” (i.e., incompetent providers), but rather “bad systems,” specifically systems that promote, or at least do not mitigate, predictable human errors.

• Medical errors and adverse events are caused by both active and latent failures, as well as factors (e.g., error-producing conditions or reliance on heuristics) that lead to cognitive errors (e.g., slips, lapses, premature closure). Diagnostic errors are a leading cause of patient safety issues in emergency medicine. High-reliability organizations are preoccupied with failures and serve as models for health care.

• In both the public and private sectors, there is a strong movement away from purchasing health care services by volume and toward purchasing value (defined as health care quality per dollar spent). Future reimbursement models will inevitably center on performance measurement and accountability, with a likely shift in the level of financial risk borne from payer to provider.

History of Health Care Quality

Efforts to assess quality in health care extend back to Dr. Ernest A. Codman, an early twentieth-century surgeon at Massachusetts General Hospital in Boston. Codman was the first to advocate for the tracking and public reporting of “end results” of surgical procedures and initiated the first morbidity and mortality conferences.1 Such public information would allow patients to choose among surgeons and surgeons to learn from better performers. Codman was clearly ahead of his time. The medical establishment resisted having their “results” measured and publicized, and Codman was accordingly ostracized by his peers. In 1913, however, the American College of Surgeons adopted Codman’s proposal of an “end result system of hospital standardization” and went on to develop the Minimum Standard for Hospitals. These efforts led to the formation of the forerunner of today’s Joint Commission (JC) in the 1950s. The JC’s accreditation process, local hospital quality reviews, professional boards, and other systems that developed allowed the medical profession and hospitals to judge the quality of their work and be held accountable only to themselves for most of the twentieth century.

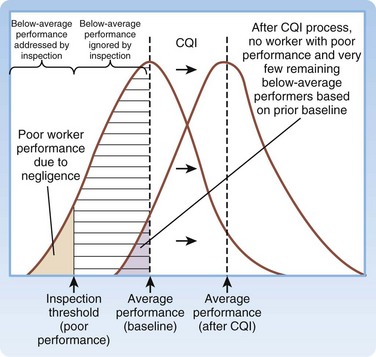

The academic science of quality management in health care is credited to Dr. Avedis Donabedian’s efforts in the 1980s. Donabedian advocated for evaluating health care quality through assessment of structure (e.g., physical plant, personnel, policies and procedures), process (how things are done), and outcome (final results).2 His ideas were first adopted in public health and later spread to administration and management. Shortly thereafter, Dr. Donald Berwick, a Harvard Medical School (Boston) pediatrician, building on a systems approach to quality management, introduced the theory of continuous quality improvement into the medical literature. In his landmark New England Journal of Medicine article,3 Berwick remarked that the “Theory of Bad Apples” relies on inspection to improve quality (i.e., find and remove the bad apples from the lot). In health care, those who subscribe to this theory seek outliers (deficient health care workers who need to be punished) and advocate a blame and shame culture through reprimand in settings such as morbidity and mortality conferences. The “Theory of Continuous Improvement,” however, focuses on the average worker, not the outlier, and on systems problems, rather than an individual’s failure.

Examples of bad systems abound in medicine. One example provided by Berwick involves the reported deaths resulting from an inadvertent mix-up of racemic epinephrine and vitamin E.4 Newborns in a nursery received the epinephrine instead of the vitamin in their nasogastric tubes. If presented as the mix-up of a benign medication for a potentially toxic one, it is viewed as appalling, and blaming individual negligent behavior is easy. Yet when one notes that the two bottles were nearly identical, one can understand why the system “is perfectly designed to kill babies by ensuring a specific—low but inevitable—rate of mixups.” The Theory of Continuous Improvement suggests that quality can be improved by improving the knowledge, practices, and engagement of the average worker and by improving the systems environment in which they work. The “immense, irresistible quantitative power derived from shifting the entire curve of production upward even slightly, as compared with a focus on trimming the tails” is what makes a systems focus so attractive (Fig. 208.1).
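The contrast between shifting the curve and trimming the tails can be illustrated with a small calculation. The following is a minimal sketch, not Berwick's own analysis: it assumes worker performance follows a normal distribution, and both the 2.5% tail that is "inspected out" and the 0.1 standard deviation shift are hypothetical values chosen only for illustration.

```python
from statistics import NormalDist


def mean_gain_from_trimming(tail_fraction: float) -> float:
    """Rise in average performance (in standard deviations) obtained by
    removing the lowest `tail_fraction` of a standard normal distribution."""
    standard = NormalDist()  # mean 0, standard deviation 1
    cutoff = standard.inv_cdf(tail_fraction)
    # Mean of a standard normal truncated below at `cutoff`.
    return standard.pdf(cutoff) / (1.0 - tail_fraction)


# Hypothetical "bad apples" policy: remove the worst 2.5% of performers.
trim_gain = mean_gain_from_trimming(0.025)

# Hypothetical systems policy: move everyone up by 0.1 standard deviations.
shift_gain = 0.10

print(f"Gain from trimming the tail: {trim_gain:.3f} SD")
print(f"Gain from shifting the curve: {shift_gain:.3f} SD")
```

Under these assumptions, removing the worst performers raises average performance by less than the modest across-the-board shift does, which is the quantitative intuition behind a systems focus.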

Although Berwick and others were publishing new studies critiquing health care’s approach to quality and safety throughout the 1980s and 1990s, not until the publication of two reports by the Institute of Medicine (IOM) did quality and safety capture the public’s attention: To Err is Human: Building a Safer Health System,5 in 1999, and Crossing the Quality Chasm: A New Health System for the 21st Century,6 in 2001.

To Err is Human focused the attention of the U.S. public on patient safety and medical errors in health care. The report’s most famous sound bite, that medical errors result in the deaths of two jumbo jetliners full of patients in U.S. hospitals each day, gained traction with lawmakers, employers, and patient advocacy groups. This estimate, that 44,000 to 98,000 deaths occur per year in the United States as a result of medical error, would make hospital-based errors the eighth leading cause of death in the United States, ahead of breast cancer, acquired immunodeficiency syndrome, and motor vehicle crashes. These statistics were derived from two large retrospective studies. In the Harvard Medical Practice Study,7 nurses and physicians reviewed more than 30,000 hospital records and found that adverse events occurred in 3.7% of hospitalizations. Of these adverse events, 13.6% were fatal, and 27.6% were caused by negligence. The Colorado-Utah study8 reviewed 15,000 hospital records and found that adverse events occurred in 2.9% of hospitalizations. Negligent adverse events accounted for 27.4% of total adverse events in Utah and for 32.6% of those in Colorado. Of these negligent adverse events, 8.8% were fatal. Extrapolation of results from these two studies provided the upper and lower limits for deaths associated with medical errors for the IOM report. In both studies, compared with other areas of the hospital, the emergency department (ED) had the highest proportion of adverse events resulting from negligence.
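The extrapolation from chart-review rates to a national death estimate is simple multiplication, sketched below. This is an illustration of the arithmetic only, not the IOM's published method. The adverse event rate (3.7%) and fatal fraction (13.6%) are the Harvard Medical Practice Study figures quoted above; the annual admission count and the fraction of adverse events considered preventable are assumed values that do not appear in this chapter.

```python
def estimated_preventable_deaths(
    admissions: float,
    adverse_event_rate: float,
    fatal_fraction: float,
    preventable_fraction: float,
) -> float:
    """Scale chart-review rates up to a yearly count of preventable deaths."""
    adverse_events = admissions * adverse_event_rate
    fatal_adverse_events = adverse_events * fatal_fraction
    return fatal_adverse_events * preventable_fraction


# Assumptions (not from this chapter): annual U.S. hospital admissions and
# the share of adverse events judged preventable.
ASSUMED_ADMISSIONS = 33_600_000
ASSUMED_PREVENTABLE_FRACTION = 0.58

deaths = estimated_preventable_deaths(
    admissions=ASSUMED_ADMISSIONS,
    adverse_event_rate=0.037,   # Harvard Medical Practice Study
    fatal_fraction=0.136,       # Harvard Medical Practice Study
    preventable_fraction=ASSUMED_PREVENTABLE_FRACTION,
)
print(f"Estimated preventable deaths per year: {deaths:,.0f}")
```

With these inputs the estimate is roughly 98,000 deaths per year, close to the upper limit cited in the report. The result is highly sensitive to the assumed inputs, which is one reason the published range is so wide.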

Crossing the Quality Chasm focused more broadly on redesign of the health care delivery system to improve the quality of care. The report begins by stating: “The American health care delivery system is in need of fundamental change. … Between the health care we have and the care we could have lies not just a gap, but a chasm.” The report goes on to detail that this chasm exists for preventive, acute, and chronic care and reflects overuse (provision of services when potential for harm exceeds potential for benefit), underuse (failure to provide services when potential for benefit exceeds potential for harm), and misuse (provision of appropriate services, complicated by a preventable error, such that the patient does not receive full benefit). The report first defined six domains for classifying quality improvement for the health care system (Table 208.1). Together, the IOM reports solidified a key concept: quality problems are generally not the result of bad apples but of bad systems. In fact, well-intentioned, hard-working people are routinely defeated by bad systems, regardless of training, competence, and vigilance.

Table 208.1 Institute of Medicine’s Six Aims for Quality Improvement

| AIM | DEFINITION |
|---|---|
| Health Care Should Be: | |
| Safe | “Avoiding injuries to patients from care that is intended to help” |
| Effective | “Providing services based on scientific knowledge (avoiding underuse and overuse)” |
| Patient centered | “Providing care that is respectful of and responsive to individual patient preferences, needs, and values and ensuring that patient values guide all clinical decisions” |
| Timely | “Reducing waits and sometimes harmful delays for both those who receive and those who give care” |
| Efficient | “Avoiding waste, in particular waste of equipment, supplies, ideas, and energy” |
| Equitable | “Providing care that does not vary in quality because of personal characteristics such as gender, ethnicity, geographic location, and socioeconomic status” |

Adapted from Committee on Quality Health Care in America. Crossing the quality chasm: a new health system for the 21st century. Washington, DC: National Academies Press; 2001.

Costs in Health Care

In 2009, the United States spent $2.5 trillion, or $8000 per person, on health care.9 Although we spend well over twice as much per capita on health care as any other industrialized country, we repeatedly fare poorly when compared with other systems on quality and outcomes.10 Half of the U.S. population admits to being very worried about paying for health care or health care insurance.11 One in four persons in the United States reports that his or her family has had problems paying for medical care during the past year.12 Close to half of all bankruptcy filings are partly the result of medical expenses.13 U.S. businesses similarly feel shackled by health care costs, with reports of companies spending more on health care than on supplies for their main products. In Massachusetts, annual health care costs in school budgets outpaced state aid for schools and forced schools to make spending cuts in books and teacher training.14 The increased cost of U.S. health care threatens the ability of our society to pay for other needed and wanted services.

Variability in Health Care

Although the number of errors coupled with increasing health care costs paints a negative picture, the variability of care is both more concerning and a source for optimism. Dr. John (Jack) E. Wennberg’s work in regional variability has illustrated the differences in cost, quality, and outcomes of U.S. health care since the 1970s. In 1973, Wennberg and Gittelsohn15 first identified the extreme degree of variability that exists in clinical practice by documenting that 70% of women in one Maine county had hysterectomies by the age of 70 years, as compared with 20% in a nearby county with similar demographics. Not surprisingly, the rate of hysterectomy was directly proportional to the physical proximity of a gynecologist. Similarly, residents of New Haven, Connecticut, are twice as likely as residents of Boston to undergo coronary artery bypass grafting (CABG), whereas residents of Boston are twice as likely as residents of New Haven to undergo carotid endarterectomy, even though both cities are home to top-rated academic medical centers.16

Dr. Wennberg’s work in regional variability led to the creation in the 1990s of the Dartmouth Atlas Project, which maps health care use and outcomes for every geographic region in the United States, and details care down to the referral region of each hospital. Atlas researchers, notably Dr. Elliott Fisher et al.,17,18 have looked at the pattern of health care delivery and have found no clear association between the volume, intensity, or cost of care and patient outcomes. According to the Dartmouth Atlas Project, patients in areas of higher spending receive 60% more care, but the quality of care in those regions is no better, and at times worse, when key quality measures are compared.17,18 More widely reported in the lay press was the finding that Medicare spends twice the national average per enrollee in McAllen, Texas, more than it does in other parts of the country, including other cities in Texas, without better-quality outcomes.19 The increased expense comes from more testing, more hospitalizations, more surgical procedures, and more home care. Variability in practice pattern, however, is not limited to overuse, but also includes underuse. McGlynn et al.20 found that, in aggregate, the U.S. population receives only 55% of recommended treatments, regardless of whether preventive, acute, or chronic care is examined.

The findings of the Dartmouth Atlas Project have frequently been cited as a rationale for health care reform legislation. The Project’s underlying methodology, however, has been scrutinized. Some investigators have questioned whether it appropriately adjusts for the costs of medical practice in different regions. Others have pointed out the limitations of focusing analysis on health care costs incurred by patients over the 2 years before their deaths and attributing these costs to the hospital most frequently visited.21 A study by Romley et al.22 reported an inverse relationship between hospital spending and inpatient mortality. Although great variability clearly exists in clinical practice, what is not clear is how to translate maps of geographic variation into health care policies that improve quality and contain costs.

Institute of Medicine Aim: Patient Safety

Echoing the ancient axiom of medicine, Primum non nocere, or “First, do no harm,” patients expect not to be harmed by the very care that is intended to help them. As such, patient safety is the most fundamental of the IOM’s six domains. Tables 208.2 and 208.3 provide the IOM’s Patient Safety and Adverse Event Nomenclature.

Table 208.2 Institute of Medicine’s Patient Safety and Adverse Event Nomenclature

| TERM | DEFINITION |
|---|---|
| Safety | Freedom from accidental injury |
| Patient safety | Freedom from accidental injury; involves the establishment of operational systems and processes that minimize the possibility of error and maximize the probability of intercepting errors when they occur |
| Accident | An event that damages a system and disrupts the ongoing or future output of the system |
| Error | The failure of a planned action to be completed as intended or the use of a wrong plan to achieve an aim |
| Adverse event | An injury caused by medical management rather than by the underlying disease or condition of the patient |
| Preventable adverse event | Adverse event attributable to error |
| Negligent adverse event | A subset of adverse event meeting the legal criteria for negligence |
| Adverse medication event | Adverse event resulting from a medication or pharmacotherapy |
| Active error | Error that occurs at the front line and whose effects are felt immediately |
| Latent error | Error in design, organization, training, or maintenance that is often caused by management or senior-level decisions; when expressed, these errors result in operator errors but may have been hidden, dormant in the system for lengthy periods before their appearance |

Adapted from Kohn LT, Corrigan J, Donaldson MS, McKenzie D. To err is human: building a safer health system. Washington, DC: National Academies Press; 2000.

Table 208.3 Active and Latent Failure Types

From Aghababian R, editor. Essentials of emergency care. 2nd ed. Sudbury, Mass: Jones and Bartlett; 2006.

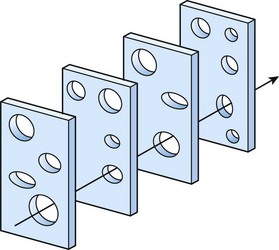

Reason’s “Swiss Cheese” Model

Described by James Reason in 1990,23 the “Swiss cheese” model of human error has four levels of human failure useful for evaluating a medical error (Fig. 208.2). The first level depicts active failures, which are the unsafe acts of the operator that ultimately led to the error. The next three levels depict latent failures (preconditions for unsafe acts, unsafe supervision, and organizational influence), which are underlying holes (or hazards) that allow errors to pass through to the sharp end. As with slices of Swiss cheese, when the holes in successive levels are not aligned, an active failure can be caught before it causes harm (a near miss). When the holes do align, however, patients can be harmed by predictable human errors because systems are not appropriately designed to protect them.
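A rough way to see why multiple layers matter is to treat each level as having some chance that its "hole" is open when a hazard arrives. The sketch below is a deliberate simplification and not part of Reason's model as published: it assumes the four levels fail independently, and every probability in it is hypothetical.

```python
import math

# Hypothetical chance that each level's hole is open when a hazard arrives.
hole_probability = {
    "organizational influence": 0.5,
    "unsafe supervision": 0.3,
    "preconditions for unsafe acts": 0.2,
    "unsafe acts (active failure)": 0.1,
}


def probability_of_harm(layers: dict[str, float]) -> float:
    """Chance that a hazard passes every level, assuming independent levels."""
    return math.prod(layers.values())


baseline = probability_of_harm(hole_probability)

# Hypothetical systems fix: halve the supervision hole, leave the rest alone.
improved_layers = {**hole_probability, "unsafe supervision": 0.15}
improved = probability_of_harm(improved_layers)

print(f"Baseline chance of harm: {baseline:.4f}")
print(f"After strengthening one level: {improved:.4f}")
```

In this toy setting, halving the hole in a single latent level halves the chance of harm even though the front-line error rate is unchanged. Real failures are rarely independent, so the numbers should be read as an illustration of the principle only.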