Historical Perspective on the Development of Mechanical Ventilation: Introduction

The history of mechanical ventilation is intimately intertwined with the history of anatomy, chemistry, and physiology; exploration under water and in the air; and, of course, modern medicine. Anatomists described the structural connections of the lungs to the heart and vasculature and developed the earliest insights into the functional relationships of these organs. They emphasized the role of the lungs in bringing air into the body and probably expelling waste products, but showed little understanding of how the body used air. Chemists defined the constituents of air and explained the metabolic processes by which cells use oxygen and produce carbon dioxide. Physiologists complemented these studies by exploring the relationships between ventilation and the levels of oxygen and carbon dioxide in the blood. Explorers tested the true limits of physiology: travel in the air and under water exposed humans to extreme ventilatory demands and prompted the development of mechanical adjuncts to ventilation. Following the historical threads laid down by the anatomists, chemists, physiologists, and explorers provides a useful perspective on a technique that modern physicians accept casually: mechanical ventilation.

Anatomists of the Heart and Lungs

Early Greek physicians endorsed Empedocles’ view that all matter was composed of four essential elements: earth, air, fire, and water. Each of these elements had primary qualities of heat, cold, moisture, and dryness.1 Empedocles applied this global philosophic view to the human body by stating that “innate heat,” or the soul, was distributed from the heart via the blood to various parts of the body.

The Hippocratic corpus stated that the purpose of respiration was to cool the heart. Air was thought to be pumped by the atria from the lungs to the right ventricle via the pulmonary artery and to the left ventricle through the pulmonary vein.2 Aristotle believed that blood was an indispensable part of animals but that blood was found only in veins. Arteries, in contrast, contained only air. This conclusion probably was based on his methods of sacrificing animals. The animals were starved, to better define their vessels, and then strangled. During strangulation, blood pools in the right side of the heart and venous circulation, leaving the left side of the heart and arteries empty.2 Aristotle described a three-chamber heart connected with passages leading in the direction of the lung, but these connections were minute and indiscernible.3 Presumably, the lungs cooled the blood and somehow supplied it with air.4

Erasistratus (born around 300 BC) believed that air taken in by the lungs was transferred via the pulmonary artery to the left ventricle. Within the left ventricle, air was transformed into pneuma zotikon, or the “vital spirit,” and was distributed through air-filled arteries to various parts of the body. The pneuma zotikon carried to the brain was secondarily changed to the pneuma psychikon (“animal spirit”). This animal spirit was transmitted to the muscles by the hollow nerves. Erasistratus understood that the right ventricle facilitated venous return by suction during diastole and that venous valves allowed only one-way flow of blood.1

The Greek physician Claudius Galen, practicing in Rome around AD 161, demonstrated that arteries contain blood by inserting a tube into the femoral artery of a dog.5,6 Blood flow through the tube could be controlled by adjusting tension on a ligature placed around the proximal portion of the artery. He described a four-chamber heart with auricles distinct from the right and left ventricles. Galen also believed that the “power of pulsation has its origin in the heart itself” and that the “power [to contract and dilate] belongs by nature to the heart and is infused into the arteries from it.”5,6 He described valves in the heart and, as did Erasistratus, recognized their essential importance in preventing the backward discharge of blood from the heart. He alluded several times to blood flowing, for example, from the body through the vena cava into the right ventricle and even made the remarkable statement that “in the entire body the arteries come together with the veins and exchange air and blood through extremely fine invisible orifices.”6 Furthermore, Galen believed that “fuliginous wastes” were somehow discharged from the blood through the lung.6 Galen’s appreciation that the lungs supplied some property of air to the body and discharged a waste product from the blood was the first true insight into the lung’s role in ventilation. However, he failed in two critical ways to appreciate the true interaction of the heart and lungs. First, he believed, as did Aristotle and other earlier Greeks, that the left ventricle was the source of the innate heat that vitalizes the animal. In this view, respiration existed for the sake of the heart, which required the substance of air to cool it: expansion of the lung caused the lightest substance, the outside air, to rush in and fill the bronchi. Galen provided no insight, though, into how air, or pneuma, might be drawn from the bronchi and lungs into the heart. Second, he did not clearly describe the circular nature of blood flow from the right ventricle through the lungs into the left ventricle and then back to the right ventricle. His writings left behind the serious misconception that blood was somehow transported directly from the right to the left ventricle through the interventricular septum.1,5,6

Byzantine and Arab scholars maintained Galen’s legacy during the Dark Ages and provided a foundation for the rebirth of science during the Renaissance.1,6,7 Around 1550, Vesalius corrected many inaccuracies in Galen’s work and even questioned Galen’s concept of blood flow from the right ventricle to the left ventricle. He was skeptical about the flow of blood through the interventricular pores Galen described.1,6,8 Servetus, a fellow student of Vesalius in Paris, suggested that the vital spirit is elaborated both by the force of heat from the left ventricle and by a change in color of the blood to reddish yellow. This change in color “is generated in the lungs from a mixture of inspired air with elaborated subtle blood which the right ventricle of the heart communicates to the left. This communication, however, is made not through the middle wall of the heart, as is commonly believed, but by a very ingenious arrangement: the subtle blood courses through the lungs from the pulmonary artery to pulmonary vein, where it changes color. During this passage the blood is mixed with inspired air and through expiration it is cleansed of its sooty vapors. This mixture, suitably prepared for the production of the vital spirit, is drawn onward to the left ventricle of the heart by diastole.”6,9 Although Servetus’ views proved ultimately to be correct, they were considered heretical at the time, and he was subsequently burned at the stake, along with most copies of his book, in 1553.

Columbus, who had assisted Vesalius with dissections at Padua, suggested in 1559 that blood travels to the lungs via the pulmonary artery and then, along with air, is taken to the left ventricle through the pulmonary vein. He further advanced the concept of circulation by noting that the left ventricle distributes blood to the body through the aorta, that blood returns to the right ventricle through the vena cava, and that the valves of the heart allow only one-way flow.1,6,10

These views clearly influenced William Harvey, who studied anatomy with Fabricius in Padua from 1600 to 1602. Harvey set out to investigate the “true movement, pulse, action, use and usefulness of the heart and arteries.” He questioned why the left and right ventricles traditionally were thought to play such fundamentally different roles. If the right ventricle existed simply to nourish the lungs, why was its structure so similar to that of the left ventricle? Furthermore, direct observation of the beating heart in animals showed that the two ventricles also functioned similarly. In both cases, when the ventricle contracted, it expelled blood, and when it relaxed, it received blood. Cardiac systole coincided with arterial pulsations. The motion of the auricles preceded that of the ventricles. Indeed, the motions were consecutive and rhythmic, the auricles contracting and forcing blood into the ventricles and the ventricles, in turn, contracting and forcing blood into the arteries. “Since blood is constantly sent from the right ventricle into the lungs through the pulmonary artery and likewise constantly is drawn into the left ventricle from the lungs…it cannot do otherwise than flow through continuously. This flow must occur by way of tiny pores and vascular openings through the lungs. Thus, the right ventricle may be said to be made for the sake of transmitting blood through the lungs, not for nourishing them.”6,11

Harvey described blood flow through the body as circular. This was easily understood if one considered the quantity of blood pumped by the heart. If the heart pumped 1 to 2 drams of blood per beat and beat 1000 times per half-hour, it put out as much as 2000 drams in this short time. This was more blood than was contained in the whole body. Clearly, the body could not produce blood fast enough to supply these needs. Where else could all the blood go but around and around, “like a stage army in an opera”? If this theory were correct, Harvey went on to say, then blood must be only a carrier of critical nutrients for the body. Presumably, the elimination of waste vapors from the lungs also was explained by the idea of blood as a carrier.1,6,11
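Harvey’s argument is, at bottom, a multiplication. The worked sketch below uses the figures quoted above (1 to 2 drams per beat, 1000 beats per half-hour); the conversion of about 3.7 mL per dram is an assumption added here for illustration only, since historical apothecary units varied (roughly 3.5 to 3.9 mL).

$$
\left(1 \text{ to } 2\ \tfrac{\text{drams}}{\text{beat}}\right) \times 1000\ \tfrac{\text{beats}}{\text{half-hour}} = 1000 \text{ to } 2000\ \tfrac{\text{drams}}{\text{half-hour}}
$$

$$
2000\ \text{drams} \times 3.7\ \tfrac{\text{mL}}{\text{dram}} \approx 7400\ \text{mL} \approx 7.4\ \text{L}
$$

On that assumed conversion, the upper estimate alone exceeds the roughly 5 L of blood in an average adult, and even the lower estimate (1000 drams, about 3.7 L), repeated over the 48 half-hours of a day, implies about 48 × 3.7 L ≈ 180 L of blood, far more than the body could manufacture.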

With Harvey’s remarkable insights, the relationship between the lungs and the heart and the role of blood were finally understood. Only two problems remained for the anatomists to resolve. First, the nature of the tiny pores and vascular openings through the lungs had to be explained. In 1661, Malpighi, working with early microscopes, found that air passes via the trachea and bronchi into and out of microscopic saccules with no clear connection to the bloodstream. He further described capillaries: “… and such is the wandering about of these vessels as they proceed on this side from the vein and on the other side from the artery, that the vessels no longer maintain a straight direction, but there appears a network made up of the articulations of the two vessels…blood flowed away along [these] tortuous vessels … always contained within tubules.”1,6 Second, Borelli, a mathematician in Pisa and a friend of Malpighi, suggested the concept of diffusion: air dissolved in liquids could pass through membranes without pores. Air and blood finally had been linked in a plausible manner.1

Chemists and Physiologists of the Air and Blood

The anatomists had identified an entirely new set of problems for chemists and physiologists to consider. The right ventricle pumped blood through the pulmonary artery to the lungs. In the lungs the blood took up some substance, as evidenced by the change in color observed as blood passed through the pulmonary circulation. Presumably the blood released “fuliginous wastes” into the lung. This exchange was thought to occur at the alveolar–capillary interface, probably by diffusion. What were the substances exchanged between blood and air in the lung? What changed the color of blood and was essential for the production of the “innate heat”? What was the process by which “innate heat” was produced, and where did this combustion occur: in the left ventricle, as the earliest Greek physician-philosophers supposed, or elsewhere? Where were the “fuliginous wastes” produced, and were they in any way related to the production of “innate heat”? If blood were a carrier, pumped by the left ventricle to the body, what was it carrying to the tissues and then back to the heart?

Von Helmont, about 1620, added acid to limestone and potash and collected the “air” liberated by the chemical reaction. This “air” extinguished a flame and seemed to be similar to the gas produced by fermentation. It also appeared to be the same gas as that found in the Grotto del Cane, a cave notorious for containing air that would kill dogs but spare their taller masters.1 The gas, of course, was carbon dioxide. In the late seventeenth century, Boyle recognized that some substance in air is necessary to keep a flame burning and an animal alive. Place a flame in a bell jar, and the flame eventually will go out. Place an animal in such a chamber, and the animal eventually will die. If another animal is placed in that same chamber soon thereafter, it will die suddenly. Mayow showed, around 1670, that a mouse enclosed in a bell jar eventually died. If the bell jar was covered by a moistened bladder, the bladder bulged inward when the mouse died. Obviously, the animals needed something in air for survival. Mayow called this the “nitro-aereal spirit” and held that when it was depleted, the animals died.1,12 This gas proved to be oxygen. Boyle’s suspicion that air owed some of its qualities primarily to its ingredients seemed well founded.13,14

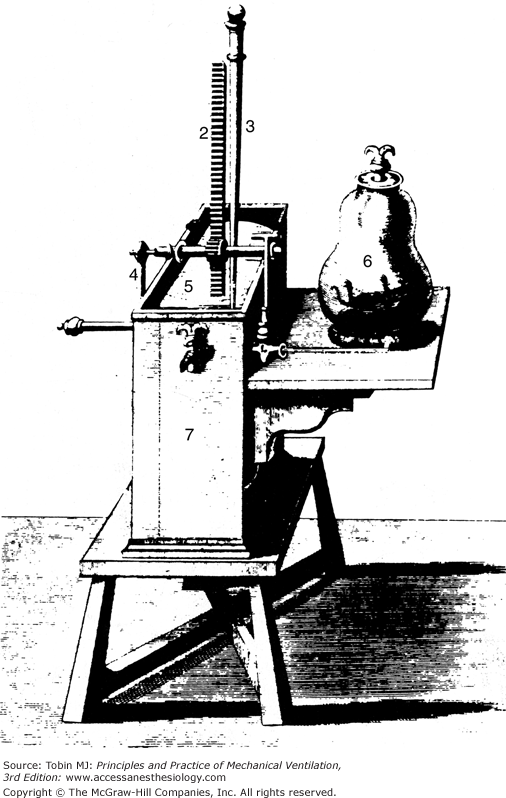

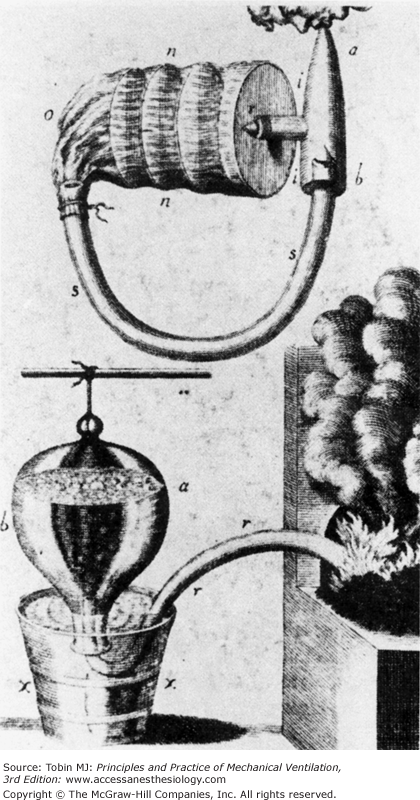

In a remarkable and probably entirely intuitive insight, Mayow suggested that the ingredient essential for life, the “nitro-aereal spirit,” was taken up by the blood and formed the basis of muscular contraction. Evidence supporting this concept came indirectly. In the mid-1600s, the concept of air pressure was first understood. Von Guericke invented a pneumatic machine that reduced air pressure.1,15 Robert Boyle later devised the pneumatic pump that could extract air from a closed vessel to produce something approaching a vacuum (Fig. 1-1). Boyle and Hooke used this pneumatic engine to study animals under low-pressure conditions. Apparently Hooke favored dramatic experiments, and he often demonstrated in front of crowds that small animals died after air was evacuated from the chamber. Hooke actually built a human-sized chamber in 1671 and volunteered to enter it. Fortunately, the pump effectively removed only about a quarter of the air, and Hooke survived.16 Boyle believed that the difficulty encountered in breathing under these conditions was caused solely by the loss of elasticity in the air. He went on to observe, however, that animal blood bubbled when placed in a vacuum, clear evidence that blood contained a gas of some type.13,14 In 1727, Hales introduced the pneumatic trough (Fig. 1-2). With this device he was able to distinguish between free gas and gas that was no longer in its elastic state but combined with a liquid.1 The foundation for blood gas machines had been laid.

Figure 1-1

A pneumatical engine, or vacuum pump, devised by Hooke in collaboration with Boyle around 1660. The jar (6) contains an animal in this illustration. Pressure is lowered in the jar by raising the tightly fitting slide (5) with the crank (4). (Used, with permission, from Graubard.6)

Figure 1-2

In 1727, Hales developed the pneumatic trough, shown on the bottom of this illustration. This device enabled him to collect gases produced by heating. On the top is a closed-circuit respiratory apparatus for inhaling the collected gases. (Used, with permission, from Perkins.1)

The first constituent of air to be truly recognized was carbon dioxide. Joseph Black, around 1754, found that limestone was transformed into caustic lime and lost weight on being heated. The weight loss occurred because a gas was liberated during the heating process. The same gas was liberated when the carbonates of alkali metals were treated with an acid such as hydrochloric acid. He called the liberated gas “fixed air” and found that it would react with lime water to form a white insoluble precipitate of chalk. This reaction became an invaluable marker for the presence of “fixed air.” Black subsequently found that “fixed air” was produced by burning charcoal and fermenting beer. In a remarkable experiment he showed that “fixed air” was given off by respiration. In a Scottish church where a large congregation gathered for religious devotions, he allowed lime water to drip over rags in the air ducts. After the service, which lasted about 10 hours, he found a precipitate of calcium carbonate (CaCO3) in the rags, proof that “fixed air” was produced during the service. Black recognized that “fixed air” was the same gas described by von Helmont that would extinguish flame and life.1,4,14
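For readers who want the chemistry behind Black’s observations, the two key reactions can be written in modern notation (the formulas postdate Black and are added here only as an illustration): heating limestone drives off “fixed air,” and passing that gas through lime water precipitates chalk.

$$
\mathrm{CaCO_3} \xrightarrow{\ \text{heat}\ } \mathrm{CaO} + \mathrm{CO_2}\uparrow
$$

$$
\mathrm{Ca(OH)_2} + \mathrm{CO_2} \rightarrow \mathrm{CaCO_3}\downarrow + \mathrm{H_2O}
$$

The first reaction accounts for the weight lost by limestone on heating, since the escaping gas carries mass away; the second is the lime-water test, in which the insoluble calcium carbonate signals carbon dioxide.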

In the early 1770s, Priestley and Scheele, working independently of each other, both produced and isolated “pure air.” Priestley used a 12-inch lens to heat mercuric oxide. The gas released in this process passed through the long neck of a flask and was isolated over mercury. This gas allowed a flame to burn brighter and a mouse to live longer than in ordinary air.1,4,15 Scheele also heated chemicals such as mercuric oxide and collected the gases in ox or hog bladders. Like Priestley, Scheele found that the gas isolated made a flame burn brighter. This gas was the “nitro-aereal spirit” described by Mayow. Priestley and Scheele described their observations to Antoine Lavoisier. He repeated Priestley’s experiments and found that if mercuric oxide was heated in the presence of charcoal, Black’s “fixed air” would be produced. Further work led Lavoisier to the conclusion that ordinary air must have at least two separate components. One part was respirable, combined with metals during heating, and supported combustion. The other part was nonrespirable. In 1779, Lavoisier called the respirable component of air “oxygen.” He also concluded from his experiments that “fixed air” was a combination of coal and the respirable portion of air. Lavoisier realized that oxygen was the explanation for combustion.1,4,17

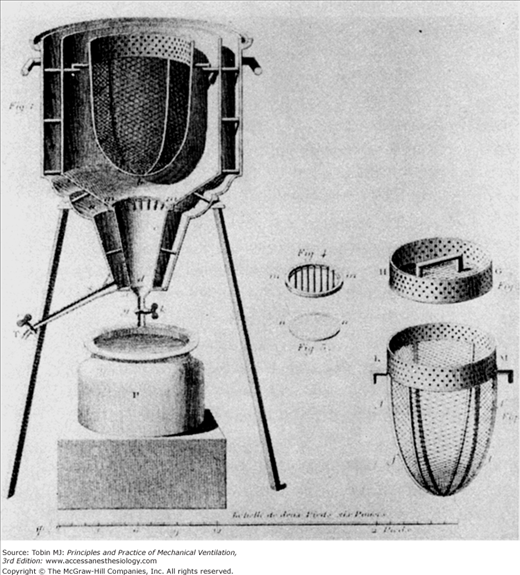

In the 1780s, Lavoisier performed a brilliant series of studies with the French mathematician Laplace on the use of oxygen by animals. Lavoisier knew that oxygen was essential for combustion and necessary for life. Furthermore, he was well aware of the Greek concept of internal heat presumably produced by the left ventricle. The obvious question was whether animals used oxygen for some type of internal combustion. Would this internal combustion be similar to that readily perceived in the burning of coal? To answer this question, the two great scientists built an ice calorimeter (Fig. 1-3). This device could do two things. Because melting ice absorbs heat, the amount of ice melted in the calorimeter could be used as a quantitative measure of heat production within it. In addition, the consumption of oxygen could be measured. It then was a relatively simple task to put an animal inside the calorimeter and carefully measure heat production and oxygen consumption. As Lavoisier suspected, for the quantity of oxygen consumed, the heat generated by the animal was similar to that produced by burning coal.1,4
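A minimal worked relation for the ice calorimeter, assuming the modern latent heat of fusion of ice, about 334 J/g (Lavoisier and Laplace worked in their own units): the heat released inside the chamber equals the mass of meltwater collected times that latent heat. The mass in the example is hypothetical.

$$
Q = m_{\text{ice}}\,L_f, \qquad L_f \approx 334\ \text{J/g}
$$

$$
m_{\text{ice}} = 100\ \text{g} \;\Rightarrow\; Q \approx 100\ \text{g} \times 334\ \text{J/g} \approx 33.4\ \text{kJ}
$$

Dividing $Q$ by the oxygen consumed over the same interval gives heat released per unit of oxygen, the ratio that could then be compared with the same ratio for burning coal:

$$
\frac{Q_{\text{animal}}}{V_{\mathrm{O_2},\,\text{animal}}} \approx \frac{Q_{\text{coal}}}{V_{\mathrm{O_2},\,\text{coal}}}
$$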

Figure 1-3

The ice calorimeter, designed by Lavoisier and Laplace, allowed these French scientists to measure the oxygen consumed by an animal and the heat produced by that same animal. With careful measurements, the internal combustion of animals was found to be similar, in terms of oxygen consumption and heat production, to open fires. (Used, with permission, from Perkins.1)

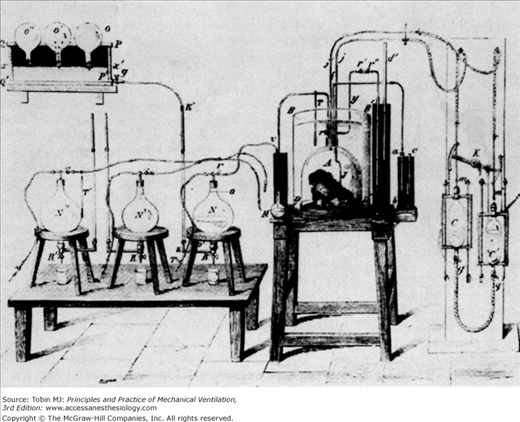

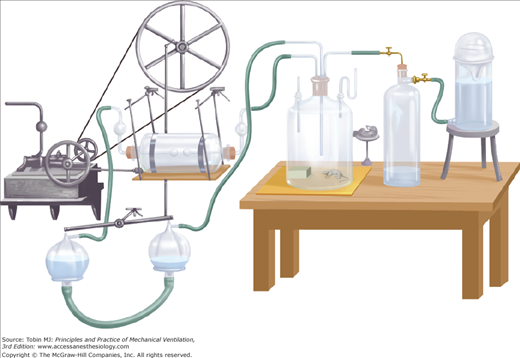

The Greeks suspected that the left ventricle produced innate heat, and Lavoisier himself may have thought that internal combustion occurred in the lungs.4 Spallanzani, though, took a variety of tissues from freshly killed animals and found that they took up oxygen and released carbon dioxide.1 Magnus, relying on improved methods of analyzing the gas content of blood, found higher oxygen levels in arterial blood than in venous blood but higher carbon dioxide levels in venous blood than in arterial blood. He believed that inhaled oxygen was absorbed into the blood, transported throughout the body, and given off at the capillary level to the tissues, where it formed the basis for the production of carbon dioxide.18 In 1849, Regnault and Reiset perfected a closed-circuit metabolic chamber with devices for circulating air, absorbing carbon dioxide, and periodically adding oxygen (Fig. 1-4). Pettenkofer built a closed-circuit metabolic chamber large enough for a man and a bicycle ergometer (Fig. 1-5).19 This device had a steam engine to pump air, gas meters to measure air volumes, and barium hydroxide to collect carbon dioxide. Although these devices were intended to examine the relationship between inhaled oxygen and exhaled carbon dioxide, they also could be viewed as among the earliest methods of controlled ventilation.

Figure 1-4

Regnault and Reiset developed a closed-circuit metabolic chamber in 1849 for studying oxygen consumption and carbon dioxide production in animals. (Used, with permission, from Perkins.1)

Figure 1-5

A. This huge device, constructed by Pettenkofer, was large enough for a person. B. The actual chamber. The gas meters used to measure gas volumes are shown next to the chamber. The steam engine and gasometers for circulating air are labeled A. C. A close-up view of the gas-absorbing device adjacent to the gas meter in B. With this device Pettenkofer and Voit studied the effect of diet on the respiratory quotient. (Used, with permission, from Perkins.1)

In separate experiments, the British scientist Lower and the Irish scientist Boyle provided the first evidence that uptake of gases in the lungs was related to gas content in the blood. In 1669, Lower placed a cork in the trachea of an animal and found that arterial blood took on a venous appearance. Removing the cork and ventilating the lungs with a bellows made the arterial blood bright red again. Lower felt that the blood must take in air during its course through the lungs and therefore owed its bright color entirely to an admixture of air. Moreover, after the air had in large measure left the blood again in the viscera, the venous blood became dark red.20 A year later Boyle showed with his vacuum pump that blood contained gas. Following Lavoisier’s studies, scientists knew that oxygen was the component of air essential for life and that carbon dioxide was the “fuliginous waste.”

About 1797, Davy measured the amounts of oxygen and carbon dioxide extracted from blood by an air pump.4 Magnus, in 1837, built a mercurial blood pump for quantitative analysis of blood oxygen and carbon dioxide content.18 Blood was enclosed in a glass tube in continuity with a vacuum pump. Carbon dioxide extracted by means of the vacuum was quantified by the change in weight of carbon dioxide–absorbent caustic potash. Oxygen content was determined by exploding the gas with hydrogen.15 A limiting factor in Magnus’s work was the assumption that the quantity of oxygen and carbon dioxide in blood depended simply on physical absorption. Hence, the variables determining gas content in blood were presumed to be the absorption coefficients and partial pressures of the gases. In the 1860s, Meyer and Fernet showed that the gas content of blood was determined by more than simple physical properties. Meyer found that the oxygen content of blood remained relatively stable despite large fluctuations in its partial pressure.21 Fernet showed that blood absorbed more oxygen than did saline solution at a given partial pressure.15

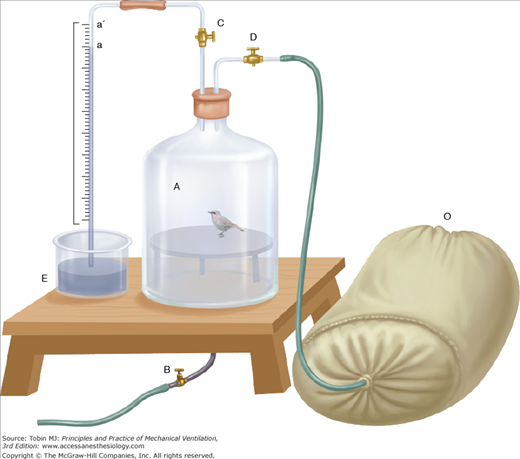

Paul Bert proposed that oxygen consumption could not strictly depend on the physical properties of oxygen dissolving under pressure in the blood. As an example, he posed the problem of a bird in flight changing altitude abruptly. Oxygen consumption could be maintained with the sudden changes in pressure only if chemical reactions contributed to the oxygen-carrying capacity of blood.15 In 1878, Bert described the curvilinear oxygen dissociation curves relating oxygen content of blood to its pressure. Hoppe-Seyler was instrumental in attributing the oxygen-carrying capacity of the blood to hemoglobin.22 Besides his extensive experiments with animals in either high- or low-pressure chambers, Bert also examined the effect of ventilation on blood gas levels. Using a bellows to artificially ventilate animals through a tracheostomy, he found that increasing ventilation would increase oxygen content in blood and decrease the carbon dioxide content. Decreasing ventilation had the opposite effect.15 Dohman, in Pflüger’s laboratory, showed that both carbon dioxide excess and lack of oxygen would stimulate ventilation.23 In 1885, Miescher-Rusch demonstrated that carbon dioxide excess was the more potent stimulus for ventilation.1 Haldane and Priestley, building on this work, made great strides in analyzing the chemical control of ventilation. They developed a device for sampling end-tidal, or alveolar exhaled, gas (Fig. 1-6). Even small changes in alveolar carbon dioxide fraction greatly increased minute ventilation, but hypoxia did not increase minute ventilation until the alveolar oxygen fraction fell to 12% to 13%.24

Figure 1-6

This relatively simple device enabled Haldane and Priestley to collect end-tidal expired air, which they felt approximated alveolar air. The subject exhaled through the mouthpiece at the right. At the end of expiration, the stopcock on the accessory collecting bag was opened, and a small aliquot of air was trapped in this device. (Used, with permission, from Best CH, Taylor NB, Physiological Basis of Medical Practice. Baltimore, MD: Williams & Wilkins; 1939:509.)

Early measurements of arterial oxygen and carbon dioxide tensions led to widely divergent results. In Ludwig’s laboratory the arterial partial pressure of oxygen was thought to be approximately 20 mm Hg, and the partial pressure of carbon dioxide reportedly was much higher. These results were difficult to reconcile with passive movement of gas between the lungs, blood, and tissues down pressure gradients, and Ludwig and others suspected that an active secretory process was involved in gas transport.4 Supporting this view, the French physicist Biot had observed that some deep-water fish had extremely large swim bladders whose gas composition seemed to differ from that of atmospheric air. Biot concluded that gas was actively secreted into these bladders.4,15,24,25 Pflüger and his coworkers developed the aerotonometer, a far more accurate device for measuring gas tensions than that used by Ludwig. When they obstructed a bronchus, they found no difference between the gas composition of the air distal to the obstruction and the gas tensions of the pulmonary venous blood draining the area. They concluded that the lung did not rely on active processes for transporting oxygen and carbon dioxide; passive diffusion was a sufficient explanation.26

Although Pflüger’s findings were fairly convincing at the time, Bohr resurrected the controversy.27 He found greater variability in blood and air carbon dioxide and oxygen tensions than Pflüger had reported and suspected that under some circumstances secretion of gases might occur. In response, Krogh, a student of Bohr’s, developed an improved blood gas–measuring technique relying on the microaerotonometer (Fig. 1-7). With his wife, Marie, Krogh convincingly showed that alveolar oxygen tension was higher than blood oxygen tension, and blood carbon dioxide tension higher than alveolar carbon dioxide tension, even when the composition of inspired air was varied.28 Douglas and Haldane confirmed Krogh’s findings but wondered whether they applied only to people at rest. Perhaps during the stress of exercise or high-altitude exposure, passive diffusion might not be sufficient. Indeed, the ability to secrete oxygen might explain the tolerance to high altitude developed with repeated or chronic exposure. Possibly carbon dioxide might also be actively excreted when carbon dioxide levels were increased.26 In a classic series of experiments, Marie Krogh showed that diffusion increased with exercise secondary to the concomitant increase in cardiac output.29 Barcroft put the diffusion-versus-secretion controversy to rest with his “glass chamber” experiment. For 6 days he remained in a closed chamber subjected to hypoxia similar to that found on Pike’s Peak. The oxygen saturation of his radial artery blood was always less than that of blood exposed to simultaneously obtained alveolar gas, even during exercise. These were the findings expected if gas transport depended simply on passive diffusion.30

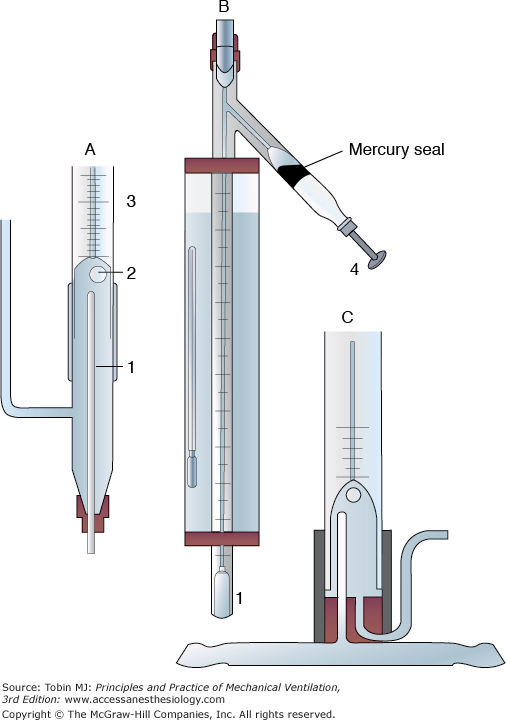

Figure 1-7

Krogh’s microaerotonometer. A. An enlarged view of the lower part of B. Through the bottom of the narrow tube (1) in A, blood is introduced. The blood leaves the upper end of the narrow tube (1) in a fine jet and plays on the air bubble (2). Once equilibrium is reached between the air bubble and blood, the air bubble is drawn by the screw plunger (4) into the graduated capillary tube shown in B. The volume of the air bubble is measured before and after treatment with KOH to absorb CO2 and potassium pyrogallate to absorb O2. The changes in volume of the bubble reflect blood CO2 and O2 content. C. A model of A designed for direct connection to a blood vessel. (Used, with permission, from Best CH, Taylor NB, Physiological Basis of Medical Practice. Baltimore, MD: Williams & Wilkins; 1939:521.)
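The volume readings described in the caption translate into gas fractions by simple subtraction. The sketch below is a hypothetical illustration, not Krogh’s published procedure: the function names are invented here, the volumes are assumed to be already corrected to dry gas at constant temperature and pressure, and the example readings are made up.

```python
# Hypothetical helpers (not Krogh's own procedure): turn the bubble volumes
# read on a microtonometer into gas fractions and partial pressures.


def bubble_gas_fractions(v_initial: float, v_after_koh: float,
                         v_after_pyrogallate: float) -> tuple[float, float]:
    """Return (CO2 fraction, O2 fraction) of the equilibrated bubble.

    KOH absorbs CO2 first, then potassium pyrogallate absorbs O2, so each
    drop in volume is attributed to one gas. Volumes are assumed to be
    dry-gas volumes read at the same temperature and pressure.
    """
    if not v_initial > 0:
        raise ValueError("initial volume must be positive")
    if not v_initial >= v_after_koh >= v_after_pyrogallate >= 0:
        raise ValueError("volume must not increase after an absorption step")
    f_co2 = (v_initial - v_after_koh) / v_initial
    f_o2 = (v_after_koh - v_after_pyrogallate) / v_initial
    return f_co2, f_o2


def fraction_to_tension(fraction: float, dry_gas_pressure_mmhg: float) -> float:
    """Partial pressure (mm Hg) of a gas making up `fraction` of the dry bubble.

    `dry_gas_pressure_mmhg` is assumed to be total pressure minus water vapor
    pressure. At equilibrium the bubble's partial pressure is taken to equal
    the gas tension of the blood that played on it.
    """
    return fraction * dry_gas_pressure_mmhg


if __name__ == "__main__":
    # Hypothetical readings in arbitrary volume units.
    f_co2, f_o2 = bubble_gas_fractions(1.000, 0.945, 0.870)
    dry_pressure = 760.0 - 47.0  # sea level minus water vapor at 37 degrees C
    print(f"PCO2 ~ {fraction_to_tension(f_co2, dry_pressure):.1f} mm Hg")
    print(f"PO2  ~ {fraction_to_tension(f_o2, dry_pressure):.1f} mm Hg")
```

Keeping the fraction and the pressure conversion in separate functions makes the assumption about water vapor explicit rather than burying it in the arithmetic.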

With this body of work, the chemists and physiologists had provided the fundamental knowledge necessary for the development of mechanical ventilation. Oxygen was understood to be the component of atmospheric gas essential for life. Carbon dioxide was the “fuliginous waste” gas released from the lungs. The exchange of oxygen and carbon dioxide between air and blood was determined by the tensions of these gases and simple passive diffusion. Blood was a carrier of these two gases, as Harvey first suggested. Oxygen was carried in two ways: dissolved in plasma and chemically combined with hemoglobin. Blood carried oxygen to the tissues, where it was used in cellular metabolism, that is, the production of the body’s “innate heat.” Carbon dioxide was the waste product of this reaction. Oxygen and carbon dioxide tensions in the blood were related to ventilation in two critical ways. First, increasing ventilation would raise oxygen tensions and lower carbon dioxide tensions, and decreasing ventilation would have the opposite effect. Second, decreased oxygen tensions and increased carbon dioxide tensions played a critical role in the chemical control of ventilation. Because blood levels of oxygen and carbon dioxide could be measured, physiologists now could assess the adequacy of ventilation.
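The inverse relation between ventilation and carbon dioxide tension can be made quantitative with the modern alveolar ventilation equation, PaCO2 ≈ 0.863 × VCO2 / VA (VCO2 in mL/min STPD, VA in L/min BTPS). That equation is a later textbook formulation, not something the investigators above wrote down. The sketch assumes a steady state with arterial PCO2 equal to alveolar PCO2, and the CO2 output used is a hypothetical resting value.

```python
# Modern alveolar ventilation equation, used here only to illustrate the
# inverse relation between ventilation and CO2 tension. Assumes steady state
# and arterial PCO2 approximately equal to alveolar PCO2.
K_MMHG = 0.863  # unit-conversion constant for the units documented below


def paco2_mmhg(vco2_ml_per_min: float, alveolar_ventilation_l_per_min: float) -> float:
    """Estimate arterial PCO2 (mm Hg) from CO2 output (mL/min, STPD)
    and alveolar ventilation (L/min, BTPS)."""
    if alveolar_ventilation_l_per_min <= 0:
        raise ValueError("alveolar ventilation must be positive")
    return K_MMHG * vco2_ml_per_min / alveolar_ventilation_l_per_min


if __name__ == "__main__":
    vco2 = 200.0  # hypothetical resting CO2 output, mL/min
    for va in (2.0, 4.0, 8.0):  # halved, baseline, and doubled ventilation
        print(f"VA = {va:.0f} L/min -> PaCO2 ~ {paco2_mmhg(vco2, va):.1f} mm Hg")
```

Because VA sits in the denominator, halving alveolar ventilation doubles the predicted PaCO2 and doubling it halves the value, which is the “opposite effect” of decreasing versus increasing ventilation described above.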

It was not understood, though, how carbon dioxide was carried by the blood until experiments performed by Bohr31 and Haldane.32 The concept of blood acid–base status was just beginning to be examined in the early 1900s. By the 1930s, a practical electrode became available for determining anaerobic blood pH,33 but pH was not thought to be clinically useful until the 1950s. In 1952, during the polio epidemic in Copenhagen, Ibsen suggested that hypoventilation, hypercapnia, and respiratory acidosis caused the high mortality rate in polio patients with respiratory paralysis. Clinicians disagreed because high blood levels of “bicarbonate” seemed to indicate an alkalosis. Ibsen’s pH measurements proved him correct, and clinicians became acutely aware of the importance of determining both carbon dioxide levels and pH.4 Numerous workers looked carefully at such factors as base excess, duration of hypercapnia, and renal buffering activity before Siggaard-Andersen published a pH/log PCO2 acid–base chart in 1971.34 This chart proved to be an invaluable basis for evaluating acute and chronic respiratory and metabolic acid–base disturbances.

The development of practical blood gas machines suitable for use in clinical medicine did not occur until electrodes became available for measuring oxygen and carbon dioxide tensions in liquid solutions. Stow built the first electrode capable of measuring blood PCO2. As the basis for this device, he used a glass pH electrode with a coaxial central calomel electrode opening at its tip. His key adaptation was to wrap the electrode in a rubber finger cot, which trapped a film of distilled water over the electrode and acted as a semipermeable membrane separating the measuring electrode from the sample.35 Clark used a similar idea in developing an oxygen-measuring device: platinum electrodes served as the measuring device, and polyethylene served as the semipermeable membrane.36 By 1973, Radiometer had begun commercial production of the first automated blood gas analyzer, the ABL, capable of measuring PO2, PCO2, and pH in blood.4
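The “bicarbonate” paradox that Ibsen’s pH measurements resolved can be illustrated with the Henderson-Hasselbalch equation in its usual clinical form, pH = 6.1 + log10([HCO3-] / (0.03 × PCO2)), with bicarbonate in mmol/L and PCO2 in mm Hg. In the minimal sketch below, the function name and the hypercapnic values are hypothetical teaching numbers, not data from the Copenhagen patients: when carbon dioxide is retained, bicarbonate rises, yet pH still falls because PCO2 rises proportionally more.

```python
import math

PK_BICARBONATE = 6.1                 # apparent pK of the carbonic acid/bicarbonate system
CO2_SOLUBILITY_MMOL_PER_MMHG = 0.03  # dissolved CO2 (mmol/L) per mm Hg of PCO2 in plasma


def plasma_ph(bicarbonate_mmol_l: float, pco2_mmhg: float) -> float:
    """pH from the Henderson-Hasselbalch equation."""
    if bicarbonate_mmol_l <= 0 or pco2_mmhg <= 0:
        raise ValueError("bicarbonate and PCO2 must be positive")
    dissolved_co2 = CO2_SOLUBILITY_MMOL_PER_MMHG * pco2_mmhg
    return PK_BICARBONATE + math.log10(bicarbonate_mmol_l / dissolved_co2)


normal = plasma_ph(24.0, 40.0)       # typical arterial values
hypercapnic = plasma_ph(35.0, 90.0)  # illustrative CO2 retention: higher bicarbonate AND higher PCO2
print(f"normal: pH {normal:.2f}")            # 7.40
print(f"hypercapnic: pH {hypercapnic:.2f}")  # 7.21
```

The elevated bicarbonate that clinicians read as alkalosis coexists here with an acidemic pH; bicarbonate alone cannot distinguish the two situations, whereas a direct pH measurement can.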

Explorers and Working Men of Submarines and Balloons

Travel in the deep sea and flight have intrigued humankind for centuries. Achieving these goals has followed a typical pattern. First, individual explorers tested the limits of human endurance. As mechanical devices were developed to extend those limits, the deep sea and the air became accessible to commercial and military exploration, which further intensified the need for safe and efficient underwater and high-altitude travel. Unfortunately, the development of vehicles to carry humans aloft and under water proceeded faster than the appreciation of the physiologic risks. Calamitous events ensued, often causing serious injury and death. Only a clear understanding of the ventilatory problems associated with flight and deep-sea travel has enabled human beings to reach outer space and the depths of the ocean floor.

Diving bells probably were derived from the ancient practice of inverting a clay pot over the head and breathing the trapped air while under water. These devices were used in various forms by Alexander the Great at the siege of Tyre in 332 BC, by the Romans in numerous naval battles, and by pirates in the Black Sea.37,38 In the 1500s, Sturmius constructed a heavy bell that, even though full of air, sank of its own weight. When the bell was positioned at the bottom of fairly shallow bodies of water, workers were able to enter it and work within the protected space. Unfortunately, these bells had to be raised periodically to the surface to refresh the air. Although the nature of the foul air was not understood, an important principle of underwater work, the absolute need for adequate ventilation, was appreciated.15

Halley devised the first modern version of the diving bell in 1690 (Fig. 1-8). To drive out the air accumulated in the bell and “made foul” by the workers’ respiration, small barrels of air were let down periodically from the surface and opened within the bell. Old air was released through the top of the bell by a valve. In 1691, Papin developed a technique for constantly injecting fresh air from the surface directly into the bell by means of a strong leather bellows. In 1788, Smeaton replaced the bellows with a pump for supplying fresh air to the submerged bell.15,37,38

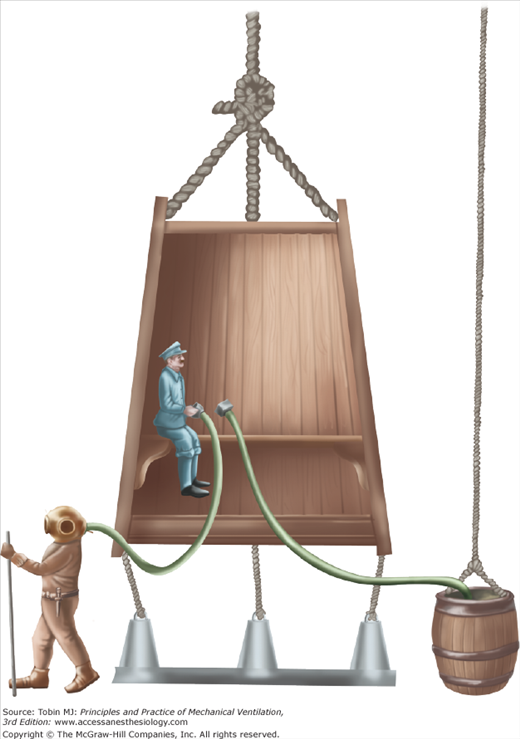

Figure 1-8

Halley’s version of the diving bell. Small barrels of fresh air were lowered periodically to the bell, and the worker inside the bell released the air. “Foul air” often was released by way of a valve at the top of the bell. Workers could exit the bell for short periods. (Used, with permission, from Hill.38)

Techniques used to make diving bells practical also were applied to individual divers. Xerxes used divers to recover sunken treasure.39 Sponge divers in the Mediterranean in the 1860s could stay submerged for 2 to 4 minutes and reach depths of 45 to 55 m.40 Amas, female Japanese divers using only goggles and a weight to speed descent, made dives to similar depths.41 Despite the remarkable breath-holding adaptations developed by these naked divers,42 their commercial and military use was limited. In 77 AD, Pliny described divers engaged in warfare who breathed through tubes while submerged. More sophisticated diving suits with breathing tubes were described by Leonardo da Vinci in 1500 and by Renatus in 1511. Although these breathing tubes prolonged underwater activities, they did not enable divers to reach even moderate depths.15 In 1680, Borelli described a complete diving dress with tubes in the helmet for recirculating and purifying air (Fig. 1-9).37

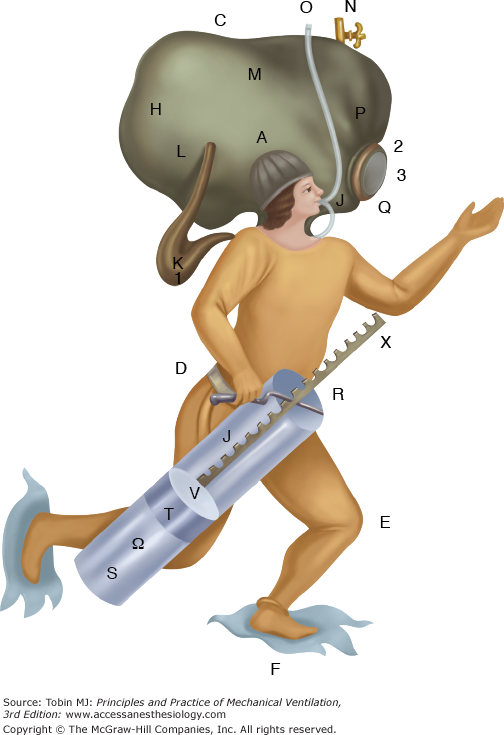

Figure 1-9

A fanciful diving suit designed by Borelli in 1680. (Used, with permission, from Hill.38)

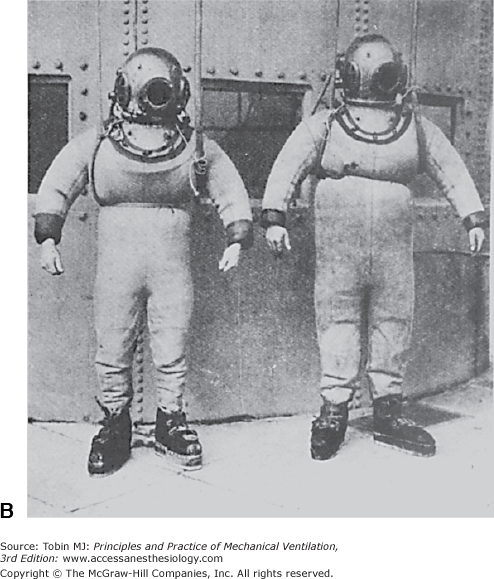

Klingert described the first modern diving suit in 1797.37 It consisted of a large helmet connected by twin breathing pipes to an air reservoir that was large enough to have an associated platform. The diver stood on the platform, inhaled from the air reservoir through an intake pipe on the top of the reservoir, and exhaled through a tube connected to the bottom of the reservoir. Siebe made the first commercially viable diving dress. The diver wore a metal helmet riveted to a flexible waterproof jacket. This jacket extended to the diver’s waist but was not sealed. Air under pressure was pumped from the surface into the diver’s helmet and escaped through the lower end of the jacket. In 1837, Siebe modified this diving dress by extending the jacket to cover the whole body. The suit was watertight at the wrists and ankles. Air under pressure entered the suit through a one-way valve at the back of the helmet and was released from the suit by an adjustable valve at the side of the helmet (Fig. 1-10).37 In 1866, Denayrouze incorporated a metal air reservoir on the back of the diver’s suit. Air was pumped directly into the reservoir, and escape of air from the suit was adjusted by the diver.15

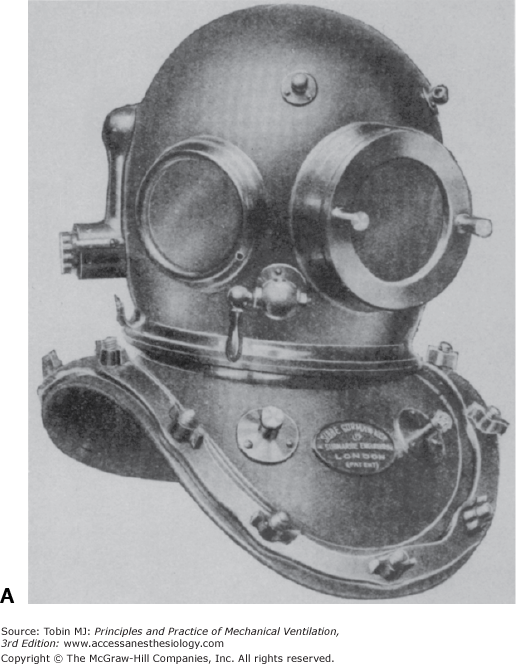

Figure 1-10

A. The metal helmet devised by Siebe is still used today. B. The complete diving suit produced by Siebe, Gorman, and Company in the nineteenth century included the metal helmet, a diving dress sealed at the wrists and ankles, and weighted shoes. (Used, with permission, from Hill.38)

Siebe, Gorman, and Company produced the first practical self-contained diving dress in 1878. This suit had a copper chamber containing potash for absorbing carbon dioxide and a cylinder of oxygen under pressure.37 Fleuss cleverly revised this diving suit in 1879 to include an oronasal mask with an inlet and an exhaust valve. The inlet valve allowed inspiration from a metal chamber containing oxygen under pressure. Expiration through the exhaust valve was directed into metal chambers under a breastplate that contained carbon dioxide absorbents. Construction of this appliance was so precise that Fleuss used it not only to stay under water for hours but also to enter chambers containing noxious gases. The Fleuss appliance was adapted rapidly and successfully to mine rescue work, where explosions and toxic gases previously had prevented such efforts.43

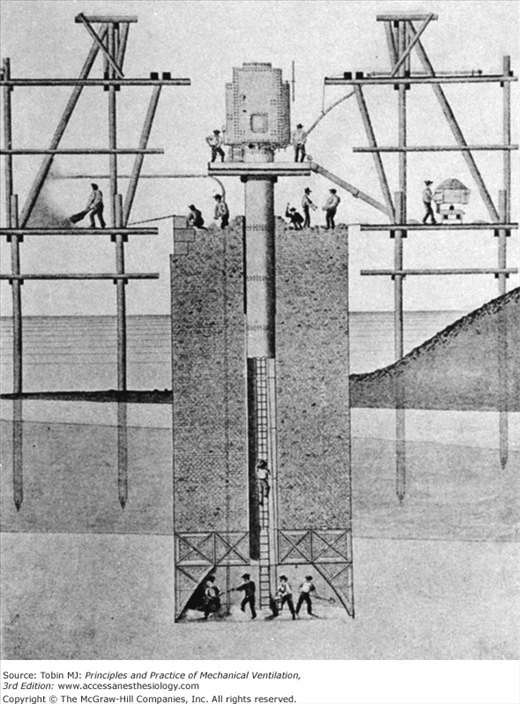

As Siebe, Gorman, and Company successfully marketed diving suits, commercial divers began to dive deeper and longer. Unfortunately, complications developed for two separate reasons. Decompression illness was recognized first. In 1830, Lord Cochrane took out a patent in England for “an apparatus for compressing atmospheric air within the interior capacity of subterraneous excavations [to]… counteract the tendency of superincumbent water to flow by gravitation into such excavations…and which apparatus at the same time is adapted to allowing workmen to carry out their ordinary operations of excavating, sinking, and mining.”38 In 1841, Triger described the first practically applied caisson for penetrating the quicksands of the Loire River (Fig. 1-11).44 This caisson, or hollow iron tube, was sunk to a depth of 20 m. The air within the caisson was compressed by a pump at the surface. The high air pressure within the caisson was sufficient to keep water out of the tube and allow workers to excavate the bottom. Once the excavation reached the prescribed depth, the caisson was filled with cement, providing a firm foundation. During the excavation process, workers entered and exited the caisson through an airlock. Triger also described the first cases of “caisson disease,” or decompression illness, in workers after they had left the pressurized caisson. As this new technology was applied increasingly in shaft and tunnel work (e.g., the Douchy mines in France in 1846; bridges across the Medway and Tamar rivers in England in 1851 and 1855, respectively; and the Brooklyn Bridge, constructed between 1870 and 1873), caisson disease was recognized more frequently. Bert was especially instrumental in pointing out the dangers of high pressure.15 Denayrouze supervised many commercial divers and probably was among the first to recognize that decompression caused illness in these divers.15 In the early 1900s, Haldane developed safe and acceptable techniques for staged decompression based on physiologic principles.38

Figure 1-11

The caisson is a complex device enabling workers to function in dry conditions under shallow bodies of water or in other potentially flooded circumstances. A tube composed of concentric rings opens at the bottom to a widened chamber, where workers can be seen. At the top of the tube is a blowing chamber for maintaining air pressure and dry conditions within the tube. Workers enter at the top through an air lock and gain access to the working area via a ladder through the middle of the tube. (Used, with permission, from Hill.38)

Haldane also played a critical role in examining how well Siebe’s closed diving suit met the ventilation needs of divers. This work may have been prompted by Bert’s studies with animals placed in high-pressure chambers. Bert found that death invariably occurred when inspired carbon dioxide levels reached a certain threshold; carbon dioxide absorbents placed in the high-pressure chamber prevented these deaths.15 Haldane’s studies in this area were encouraged by a British Admiralty committee studying the risks of deep diving in 1906. Haldane understood that minute ventilation varied directly with alveolar carbon dioxide levels. It appeared reasonable that the same minute ventilation needed to maintain an appropriate PACO2 at sea level would be needed to maintain a similar PACO2 under water. What was not appreciated initially was that as the diver descended and pressure increased, the air delivered by the surface pump was compressed in proportion to the ambient pressure, so pump ventilation at the surface would have to increase to maintain the same ventilation at depth. Haldane realized that at 2 atmospheres of pressure, or 33 ft under water, pump ventilation would have to double to ensure appropriate ventilation. This estimate did not account for muscular effort, which would further increase ventilatory demands. Unfortunately, early divers did not appreciate the need to adjust the air supply of the diving suit to depth. Furthermore, air pumps often leaked or were poorly maintained. Haldane demonstrated the relationship between divers’ symptoms and hypercapnia by collecting exhaled gas from divers at various depths. The partial pressure of carbon dioxide in the divers’ helmets ranged from 0.0018 to 0.10 atm.26,38 The investigations of Bert and Haldane finally clarified the nature of the “foul air” in diving bells and suits and the role of adequate ventilation in protecting underwater workers from hypercapnia.
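Haldane’s doubling rule follows from Boyle’s law. Because the diver produces the same amount of carbon dioxide per minute at any depth, holding the actual volume of air flowing through the helmet constant keeps the helmet’s carbon dioxide partial pressure at its surface value, and that requires the surface pump’s output of free air to rise in proportion to absolute pressure. The minimal sketch below assumes isothermal compression, takes 33 ft of seawater as one additional atmosphere, and ignores pump leaks and muscular effort; the function names and the 30 L/min helmet flow are hypothetical.

```python
# Assumption: roughly 33 ft of seawater adds 1 atmosphere of pressure.
FEET_SEAWATER_PER_ATM = 33.0


def absolute_pressure_atm(depth_ft: float) -> float:
    """Ambient pressure, in atmospheres absolute, at a given seawater depth."""
    if depth_ft < 0:
        raise ValueError("depth must be non-negative")
    return 1.0 + depth_ft / FEET_SEAWATER_PER_ATM


def surface_pump_delivery(flow_at_depth_l_min: float, depth_ft: float) -> float:
    """Free air (litres/min measured at 1 atm) the surface pump must deliver so
    that the helmet receives `flow_at_depth_l_min` litres/min at depth.

    Boyle's law (constant temperature): air compressed to P atm occupies 1/P of
    its surface volume, so surface delivery scales linearly with P.
    """
    return flow_at_depth_l_min * absolute_pressure_atm(depth_ft)


# Hypothetical helmet flow of 30 L/min at depth; leaks and exertion ignored.
for depth in (0, 33, 66, 99):
    print(f"{depth:>3} ft: {absolute_pressure_atm(depth):.0f} atm, "
          f"{surface_pump_delivery(30.0, depth):.0f} L/min of surface air")
```

At 33 ft the pump must supply twice its surface output, matching Haldane’s doubling; at 99 ft it must supply four times as much, which helps explain why leaking or poorly maintained pumps left deeper divers hypercapnic.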
Besides his work with divers, Haldane also demonstrated that the “black damp” found in mines actually was a dangerously toxic blend of 10% CO2 and 1.45% O2.45 He developed a self-contained rescue apparatus for use in mine accidents that apparently was more successful than the Fleuss appliance.46

Diving boats were fancifully described by Marsenius in 1638 and by others. Only the boat designed by Drebbel, as described in 1648, appeared plausible because “besides the mechanical contrivances of his boat, he had a chemical liquor, the fumes of which, when the vessel containing it was unstopped, would speedily restore to the air, fouled by the respiration, such a portion of vital spirits as would make it again fit for that office.” Although the liquor was never identified, it probably was an alkali for absorbing carbon dioxide.38 Payerne built a submarine for underwater excavation in 1844. Since 1850, the modern submarine has been developed primarily for military actions at sea.

Submarines are an intriguing physiologic experiment in simultaneously ventilating many subjects. Ventilation in submarines is complex because it involves not only oxygen and carbon dioxide levels but also heat, humidity, and body odors. Early work in submarines documented substantial increases in temperature, humidity, and carbon dioxide levels.47 Mechanical devices for carbon dioxide absorption and air renewal were developed quickly,48 and by 1928, Du Bois thought that submarines could remain submerged safely for up to 96 hours.49 With the available carbon dioxide absorbents, such as caustic soda, caustic potash, and soda lime, carbon dioxide levels could be kept below a relatively safe limit of 3%. Supplemental oxygen carried by the submarine could keep the fractional inspired oxygen concentration above the preferred minimum of 17%.39,50–52

In 1782, the Montgolfier brothers astounded the world by constructing a linen balloon about 18 m in diameter, filling it with hot air, and letting it rise about 2000 m into the air. On November 21, 1783, two Frenchmen, de Rozier and the Marquis d’Arlandes, became the first humans to fly in a Montgolfier balloon.53 Within a few years, Jeffries and Blanchard had crossed the English Channel in a balloon, and Charles had reached the astonishing height of 13,000 ft in a hydrogen-filled balloon. As with diving, however, the machines that carried them aloft brought human passengers past the limits of their physiologic endurance. Glaisher and Coxwell reached perhaps 29,000 ft in 1862 but suffered temporary paralysis and loss of consciousness.4,26,54

Acosta’s description in 1573 of vomiting, disequilibrium, fatigue, and distressing grief as he traversed the Escaleras (Stairs) de Pariacaca, between Cuzco and Lima, Peru (“one of the highest places in the universe”), was widely known in Europe.55 In 1804, von Humboldt attributed these high-altitude symptoms to a lack of oxygen. Surprisingly, however, he found that the fraction of oxygen in high-altitude air was similar to that found in sea-level air. He actually suggested that respiratory air might be used to prevent mountain sickness.56 Longet expanded on this idea in 1857 by suggesting that the blood of high-altitude dwellers should have a lower oxygen content than that of sea-level natives. In a remarkable series of observations during the 1860s, Coindet described respiratory patterns of French people living at high altitude in Mexico City. Compared with sea-level values, respirations were deeper and more frequent, and the quantity of air expired in 1 minute was somewhat increased. He reasoned that “this is logical since the air of altitudes contains in a given volume less oxygen at a lower barometric pressure…[and therefore] a greater quantity of this air must be absorbed to compensate for the difference.”15 Although these conclusions seem reasonable now, physiologists of the time also considered decreased air elasticity, wind currents, exhalations from harmful plants, expansion of intestinal gas, and lack of support in blood vessels as other possible explanations for the breathing problems experienced at high altitude. Bert, the father of aviation medicine, was instrumental in clarifying the interrelationship among barometric pressure, oxygen tension, and symptoms. In experiments on animals exposed to low-pressure conditions in chambers (Fig. 1-12), carbonic acid levels increased within the chamber, but carbon dioxide absorbents did not prevent death. Supplemental oxygen, however, protected animals from dying under simulated high-altitude conditions (Fig. 1-13). More importantly, he recognized that death resulted from the combined effect of the fraction of inspired oxygen and barometric pressure: when the product of these two variables, that is, the partial pressure of oxygen, fell to a critical threshold, death ensued.15,39
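Bert’s conclusion can be stated as PO2 = FiO2 × PB: the inspired oxygen partial pressure is the product of the inspired oxygen fraction and the barometric pressure. The minimal sketch below, with hypothetical function names, treats the inspired gas as dry (it ignores water vapor) and uses 0.21 as the oxygen fraction of air. It shows that halving barometric pressure halves the inspired PO2 of air, and that doubling the inspired oxygen fraction restores it, which is what Bert’s oxygen bag achieved for the bird in the low-pressure bell jar.

```python
SEA_LEVEL_MMHG = 760.0
AIR_FIO2 = 0.21  # approximate oxygen fraction of dry atmospheric air


def inspired_po2(fio2: float, barometric_mmhg: float) -> float:
    """Partial pressure of O2 (mm Hg) in dry inspired gas: FiO2 x barometric pressure."""
    if not 0.0 <= fio2 <= 1.0:
        raise ValueError("FiO2 must lie between 0 and 1")
    return fio2 * barometric_mmhg


def fio2_for_target(target_po2_mmhg: float, barometric_mmhg: float) -> float:
    """Inspired O2 fraction needed to reach a target PO2 at a given barometric pressure."""
    fio2 = target_po2_mmhg / barometric_mmhg
    if fio2 > 1.0:
        raise ValueError("target PO2 exceeds what pure oxygen can supply at this pressure")
    return fio2


sea_level = inspired_po2(AIR_FIO2, SEA_LEVEL_MMHG)         # breathing air at sea level
half_atm = inspired_po2(AIR_FIO2, SEA_LEVEL_MMHG / 2)       # breathing air at half an atmosphere
needed = fio2_for_target(sea_level, SEA_LEVEL_MMHG / 2)     # FiO2 that restores sea-level PO2
print(f"{sea_level:.1f} {half_atm:.1f} {needed:.2f}")       # 159.6 79.8 0.42
```

The `ValueError` branch in `fio2_for_target` marks the ceiling of supplemental oxygen alone: once barometric pressure falls far enough, even pure oxygen cannot restore the sea-level value, and only raising the pressure around the subject can.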

Figure 1-12

A typical device used by Bert to study animals under low-pressure conditions. (Used, with permission, from Bert.15)

Figure 1-13

A bird placed in a low-pressure bell jar can supplement the enclosed atmospheric air with oxygen inspired from the bag labeled O. Supplemental oxygen prolonged survival in these experiments. (Used, with permission, from Bert.15)

Croce-Spinelli, Sivel, and Tissandier were adventurous French balloonists eager to reach the record height of 8000 m. At Bert’s urging, they experimented with oxygen tanks in preliminary balloon flights and even in Bert’s decompression chamber. In 1875, they began their historic attempt to set an altitude record, supplied with oxygen cylinders (Fig. 1-14). Unfortunately, at 24,600 ft they released too much ballast, and their balloon ascended so rapidly that they lost consciousness before they could use the oxygen. When the balloon eventually returned to earth, only Tissandier remained alive.4,54 This tragedy shook France; the idea that two men had died in the air was especially disquieting.53 However, the deaths of Croce-Spinelli and Sivel were not clearly attributed to hypoxia. Von Schrotter, an Austrian physiologist, accepted Bert’s view that oxygen deficit was the lethal threat and encouraged Berson to attempt further high-altitude balloon flights. Von Schrotter first devised a system for supplying oxygen from a steel cylinder through tubing leading to the balloonists. Later, he conceived the idea of a face mask to supply oxygen more easily and also began to use liquid oxygen. With these devices, Berson reached 36,000 ft in 1901.4,54

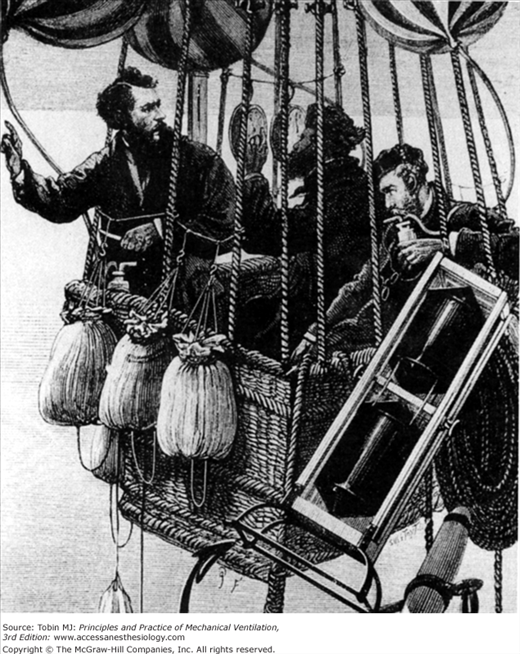

Figure 1-14

The adventurous French balloonists Croce-Spinelli, Sivel, and Tissandier begin their attempt at a record ascent. The balloonist at the right can be seen inhaling from an oxygen tank. Unfortunately, the supplemental oxygen did not prevent tragic results from a too rapid ascent. (Used, with permission, from Armstrong HG. Principles and Practice of Aviation Medicine. Baltimore, MD: Williams & Wilkins; 1939:4.)

The Wright brothers’ historic flight at Kitty Hawk in 1903 substantially changed the nature of flight. The military value of airplanes was soon appreciated and exploited during World War I. The Germans were especially interested in increasing the altitude limits for their pilots. They applied the concepts advocated by von Schrotter and provided liquid oxygen supplies for high-altitude bombing flights. Interest in airplane flights for commercial and military uses was especially high following Lindbergh’s solo flight across the Atlantic in 1927. Much work was done on valves and oxygen gas regulators in the hope of further improving altitude tolerance. A series of high-altitude airplane flights using simple face masks and supplemental oxygen culminated in Donati’s reaching an altitude of 47,358 ft in 1934. This was clearly the limit for human endurance using this technology.4,54

Somewhat before Donati’s record, a breakthrough in flight was achieved by Piccard, who enclosed an aeronaut in a sealed, spherical metal chamber maintained at a pressure equivalent to that at sea level. The aeronaut easily exceeded Donati’s record and reached 55,000 ft. This work recapitulated the important physiologic concept, derived from Bert’s earlier experiments in high-altitude chambers, that oxygen availability is a function of both fractional inspired oxygen and barometric pressure. Piccard’s work stimulated two separate investigators to adapt pressurized diving suits for high-altitude flying. In 1933, Post devised a rubberized, hermetically sealed silk suit. In the same year, Ridge worked with Siebe, Gorman, and Company to modify a self-contained diving dress for flight. This suit provided oxygen under pressure and an air circulator with a soda lime canister for carbon dioxide removal. These suits proved quite successful, and soon pilots were exceeding heights of 50,000 ft. Parallel work with sealed gondolas attached to huge balloons led to ascents higher than 70,000 ft. In 1938, Lockheed produced the XC-35, which was the first successful airplane with a pressurized cabin (Fig. 1-15).4,54 These advances were applied quickly to military aviation in World War II. The German Air Ministry was particularly interested in developing oxygen regulators and valves and positive-pressure face masks for facilitating high-altitude flying.57
