Fig. 51.1

Carl Koller (1857–1944) is recognized as the discoverer of the usefulness of cocaine for local anesthesia. (Courtesy of the National Library of Medicine, Bethesda, MD)

While Koller is recognized as the “discoverer of local anesthesia”, this title may be no more valid than recognizing Christopher Columbus as the discoverer of America. Other investigators had noted the local anesthetic properties of cocaine and its potential uses before Koller’s experiments and clinical application. Nonetheless, Koller was responsible for introducing cocaine into clinical practice as a local anesthetic. Perhaps a more appropriate title would be “discoverer of clinical local anesthesia”. As with the ether story, a surprisingly long time elapsed between the recognition of cocaine’s potential as an anesthetic and the translational event that brought it into clinical use.

Although the first verified use of the coca plant (Erythroxylum coca), around 500 CE, was established by its presence in an ancient Peruvian gravesite [3], its stimulant properties were recognized more than two millennia earlier. The Incas considered the plant divine and called it khoka, or “the plant”, a term Europeans rendered as coca [4]. It was used in religious ceremonies and described as “that heavenly plant which satisfies the hungry, strengthens the weak, and makes men forget their misfortunes” [5]. Some early European explorers shared such sentiments. For example, Paolo Mantegazza (1831–1910), an Italian pathologist, anthropologist, writer, and member of Parliament, worked in Argentina from 1854 to 1858. He had extensive experience with coca and, on his return to Italy, published a monograph, “On the hygienic and medicinal virtues of coca”. His descriptions are astounding:

“….some of the images I tried to describe in the first part of my delirium were full of poetry. I sneered at mere mortals condemned to live in this valley of tears while I, carried on the wind of two leaves of coca, went flying through the spaces of 77,438 worlds, each more splendid than the one before. An hour later, I was sufficiently calm to write these words in a steady hand ‘God is unjust because he made man incapable of sustaining the effect of coca all life long. I would rather have a life span of ten years with coca than one of 1000000….(and here I have inserted a line of zeros) centuries without coca’.”

The German chemist Friedrich Gaedcke (1828–1890) was the first to extract the active ingredient of the coca plant, doing so in 1855; he named it erythroxyline [1]. Shortly thereafter, Carl von Scherzer (1821–1903), returning from an expedition on the frigate Novara, delivered an abundant supply of the plant to the chemist Friedrich Woehler (1800–1882), who asked his assistant, Albert Niemann (1834–1861), to extract the alkaloid [6]. Niemann, or perhaps Woehler, named the product cocaine, a name with greater appeal than erythroxyline. Niemann also chewed some of the isolated compound and reported, “It numbs the tongue, and takes away both feeling and taste” [7]. In 1862, Karl von Schroff (1802–1887) reported to the Viennese Medical Society on the numbness cocaine produced when applied to the tongue. However, no one in Vienna then applied cocaine to relieve surgical pain.

In 1868, T. Moreno y Maiz [8], later Physician-in-Chief of the Peruvian Army, reported the first systematic investigation of the anesthetic properties of cocaine. In frogs, he noted:

“Notwithstanding that the spinal cord remains intact, we nevertheless observe disappearance of sensibility in the injected limb. It is therefore on peripheral sensibility that cocaine acetate appears to act. Furthermore the local action of the substance is very evident. Could it be used as a local anesthetic? One cannot reply on the basis of so few experiments; it is the future that will decide.” [8]

In these animal experiments he also described cocaine’s capacity to produce generalized irritability and convulsions, and self-experimentation confirmed that it induced a feeling of well-being, but Moreno y Maiz did not apply cocaine to relieve surgical pain. Missed the gold ring, he did.

In 1880, the Estonian B. von Anrep (1852–1927) [9] described the potential of cocaine to produce anesthesia but failed to recognize the importance of this observation:

“After studying the physiological actions of cocaine in animals I had intended to investigate it also in man. Various other activities have so far prevented me from doing so. Although the experiments on animals have not had any practical consequences, I would recommend that cocaine be tested as a local anesthetic.” [9]

Von Anrep did not take his own advice with respect to surgical anesthesia, although he did use cocaine to treat pain.

Koller’s interest in cocaine came by way of Sigmund Freud (1856–1939), a senior colleague and friend. Freud was interested in orally administered cocaine as a possible treatment for various conditions, including fatigue, dyspepsia, hysteria, and headaches. He also advocated its use in managing morphine addiction and prescribed it for his colleague, the notable pathologist Ernst Fleischl von Marxow (1846–1891), who had become addicted to morphine used to treat neuropathic pain after a thumb amputation [2]. Cocaine addiction merely replaced morphine addiction and led to von Marxow’s untimely death.

While primarily interested in cocaine’s systemic effects, Freud alluded to its anesthetic properties in his 1884 publication Über Coca, commenting, “Indeed, the anesthetizing properties of cocaine should make it suitable for a good many further applications”. He encouraged two colleagues, Leopold Königstein and Koller, to investigate cocaine’s use in ophthalmological surgery, but Königstein’s interests focused on the treatment of pathological conditions. Freud’s role in prompting Koller’s investigations of cocaine is not entirely clear, but Freud himself stated that the discovery of topical surgical anesthesia belonged to Koller [10].

A colleague of Koller’s remarked on cocaine’s numbing effect on the tongue [1]. Koller confirmed the resulting lack of sensitivity with self-experimentation, commenting, “At that moment it flashed upon me that I was carrying in my pocket the local anesthetic for which I had searched some years earlier. I went straight to the laboratory… and instilled this into the eye of the animal.” He performed the first surgical procedure under cocaine anesthesia on a patient with glaucoma on 11 September 1884, four days before the historic Heidelberg demonstration.

It is difficult to understand the inordinate time that elapsed between the recognition of cocaine’s anesthetic properties and its clinical application. Perhaps Winston Churchill summarized it best: “Men occasionally stumble over the truth, but most will pick themselves up, and hurry along as if nothing happened”.

Although Koller won fame for introducing one of the most important contributions to medicine, he never obtained the assistantship he much desired in the University of Vienna Eye Clinic. In addition to being Jewish (hardly an asset during that period in Austria), he was, in his daughter’s words, “a tempestuous young man, and one who could never be compelled to speak diplomatically even for his own good” [1]. Any hope of an appointment evaporated with an incident that put his career in jeopardy. A disagreement over patient management led another physician to call him either a “Jewish swine” or an “impudent Jew.” Koller struck the offender in the face. Both men were reserve officers in the Austrian army, and they settled the matter with a sabre duel that ended after three thrusts, with Koller inflicting wounds on his opponent’s head and upper arm. Koller was not wounded, at least physically, but was charged with criminal offences, which were later withdrawn [1]. Koller emigrated to New York, where he maintained a successful practice and died in 1944. In 1921, he was awarded the American Ophthalmological Society’s first gold medal.

Cocaine’s ability to induce insensibility was soon used for other clinical applications, including topical anesthesia in diverse areas (e.g., mucosal surfaces of the nose, throat, and urethra). Techniques for spinal anesthesia and peripheral nerve blocks were also developed, the former championed by J. Leonard Corning (1855–1923) and August Bier (1861–1949), and the latter by William Halsted (1852–1922) and Richard Hall (1856–1897).

The Emergence of Toxicity

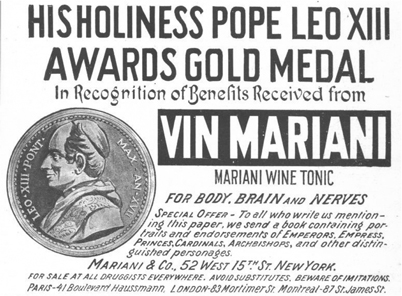

At its introduction into clinical medicine, cocaine was thought to be remarkably safe and, indeed, beneficial to health. It enjoyed widespread popularity outside mainstream medicine, notably in Vin Mariani (Fig. 51.2), a popular French tonic of Bordeaux wine infused with coca leaves. Such notables as Thomas Edison, Jules Verne, Auguste Rodin, H. G. Wells, and Pope Leo XIII (Fig. 51.3) consumed and endorsed the drink. Robert Louis Stevenson is said to have written “Dr Jekyll and Mr Hyde” under the influence of cocaine. Vin Mariani inspired John Pemberton’s French Wine Coca in Atlanta, Georgia, and after prohibition was introduced in Atlanta and Fulton County in 1886, a non-alcoholic version of the drink premiered as Coca-Cola. Coca-Cola continued to contain cocaine until 1903.

Fig. 51.2

Vin Mariani “fortified and refreshed body & brain” with its wine and cocaine content. (A lithograph created by Jules Cheret, printed in 1894)

Fig. 51.3

Pope Leo XIII recognized the benefits received from Vin Mariani. (From an advertisement that was printed in 1899)

Many physicians also initially believed cocaine was relatively innocuous. As late as 1886, one practitioner told the New York Neurological Society, “I do not believe that any dose could be taken that would be dangerous” [11]. However, as its use expanded, so did reports of central nervous system and cardiac toxicity. In 1887, speaking before the Kings County Medical Society, Jansen Mattison (1845–?) related 50 cases of toxicity, including 4 fatal cases. By 1891, this number had increased to 126 [11]. The significance of these complications can be appreciated from Mattison’s comment that “the risk of untoward results have robbed this peerless drug of much favor in the minds of many surgeons, and so deprived them of a most valued ally.” Mattison dismissed the commonly held belief that toxicity was due to impurities: “It has a killing power, per se, and the purer the product, the more decided this may be.”

The Search for Less Toxic Local Anesthetics Begins

Toxicity and emerging problems of addiction stimulated the search for alternative topical and local anesthetics. Early investigations of natural products identified tropocaine, an alkaloid isolated from a different variety of coca, but this compound had no therapeutic advantage over cocaine [4]. Synthetic chemistry proved more fruitful. Mimicking the chemical structure of cocaine, synthetic strategies focused on benzoic acid esters. In 1890, Eduard Ritsert created benzocaine. However, its poor water solubility made it suitable only for topical anesthesia, an application sunburn sufferers still find useful. Similar synthetic efforts by Alfred Einhorn (1856–1917) and colleagues (in the employ of the Bayer Company, originally a dye works founded by Friedrich Bayer and Johann Weskott in 1863) resulted in procaine, one of 18 related compounds patented in 1904 [7].

Shortly after its introduction, Heinrich Braun documented the effectiveness and reduced toxicity of procaine relative to cocaine [12]. Procaine, however, lacked cocaine’s vasoconstrictive properties (which slow its absorption into the bloodstream), and Braun made prescient comments concerning toxicity and the potential utility of epinephrine:

“The new drug by itself cannot substitute for cocaine. To obtain results similar to those with cocaine one would have to increase the concentration and dose so much that the lower toxicity would be rendered illusionary. Fortunately, this drawback can be readily overcome with the addition of epinephrine.” [12]

Although procaine had a greater therapeutic index than cocaine, this advantage did not eliminate concerns about toxicity. In response, the American Medical Association established the Committee for the Study of Toxic Effects of Local Anesthetics, under the leadership of Emil Mayer. The first publication of the committee described 43 deaths associated with the use of local anesthetics. The anesthetic was judged to have caused all but 3 deaths, including 2 that clinicians had attributed to “status lymphaticus” [13].

Although seizures (convulsions) or respiratory failure were usually the first or sole manifestation of toxicity, in some cases cardiac arrest occurred before apnea and without convulsions. Medical errors (administration of the wrong drug or of an overdose) caused several deaths. Incorrect drug administration frequently followed misinterpretation of verbal orders. Excessive doses of anesthetic were also administered because anesthetic solutions were not labeled. The resulting recommendations for preventing such incidents (e.g., solutions should be uniquely identifiable with respect to drug and concentration, verbal orders should be minimized) paralleled modern National Patient Safety Goals. (The more things change, the more they remain the same.) The committee’s review appeared to confirm cocaine’s greater toxicity: although it was used less often than procaine, cocaine was associated with the majority of cases. The committee did not recommend abandoning the use of cocaine, but its recommendations effectively eliminated widespread use of cocaine as an injectable anesthetic.

The report by Mayer’s committee did much to change practice and reduce the toxic manifestations of local anesthetics. However, procaine’s short duration of action limited its clinical utility. This set the stage for the introduction of tetracaine, a compound synthesized in 1928 by Eisleb and introduced into clinical practice four years later [14]. While tetracaine represented a step forward (it had a longer duration of effect than procaine), it was toxic when used in large volumes for peripheral blocks. As with procaine, its ester linkage rendered it somewhat unstable and thus not amenable to repeated sterilization by autoclaving. These characteristics ultimately limited its use, primarily to spinal anesthesia, for which it still finds some application. A similar fate befell dibucaine, a compound synthesized by Miescher and introduced into clinical practice by Uhlmann.

The Woolley and Roe Case: A Major Setback for Local Anesthesia

Spinal anesthesia served as the spearhead for local anesthetic development. Sporadic reports of neurological sequelae may have tempered enthusiasm but did little to curb the use of this invaluable technique. However, the tragic occurrence of paraplegia in two patients (Albert Woolley and Cecil Roe) on a single day in 1947 at Chesterfield Royal Hospital culminated in a landmark legal case that would profoundly affect spinal anesthesia, not just in the UK but throughout the world. The court ruled in the physician’s favor, judging that the weight of evidence overwhelmingly supported contamination of the anesthetic as the cause of injury [15]. The contamination was thought to have occurred when phenol used for sterilization penetrated invisible cracks in the anesthetic ampoules. Although others have challenged this theory, alternative explanations have largely focused on contamination of the equipment used to deliver the local anesthetic rather than of the anesthetic solution itself, again exonerating the local anesthetic [15]. Most critically, modern techniques for ensuring sterility eliminated such risks, enabling spinal anesthesia to be performed with impressive safety in current practice.

Introduction of Amide Anesthetics

The commercial release of lidocaine in 1948 heralded the era of modern local anesthetics. Unlike procaine and tetracaine, which have ester linkages, lidocaine has an amide linkage and consequently greater stability and a longer shelf life. Also unlike procaine, it does not break down to p-aminobenzoic acid, a compound believed responsible for allergic reactions. Lidocaine was not the first amide anesthetic; Niraquine, introduced in 1898, was abandoned because of its local irritant properties [4].

The development of lidocaine emerged from studies of mutant barley [16,17]. Synthesis of analogs of an alkaloid isolated from these plants yielded LL30, named for the two chemists responsible for its synthesis and for the discovery of its local anesthetic properties, Nils Löfgren (1913–1967) and Bengt Lundqvist. Lundqvist was a young chemistry student who came to Löfgren’s laboratory after the latter had synthesized LL30. Lundqvist wanted something to experiment with, and Löfgren gave him some of the LL30. With little research money and no laboratory animals available, Lundqvist borrowed some texts on local anesthesia from a friend who was a medical student and took the LL30 home. There he supposedly performed digital and spinal blocks on himself! How he could have performed a spinal block on himself is unclear; it is said that he used a mirror, but perhaps he had help! He then told Löfgren that LL30 was the best local anesthetic substance in existence. Also interesting is the opportunity that Pharmacia passed up by declining to buy the patent when it was offered! LL30 was marketed as Xylocaine; its generic name is lidocaine (lignocaine in British usage).

Löfgren was a dedicated researcher, brilliant lecturer, and gifted musician. He became wealthy as a result of the discovery of lidocaine but was increasingly frustrated with academic bureaucracy. During an episode of depression, he took his own life in 1967. Lundqvist was a champion fencer and a keen sailor. At the age of 30, he suffered a head injury from which he died. His family used the royalties from his discovery to establish a fund to pay for the studies of many young and promising chemistry students [18].

In 1943, Astra Pharmaceuticals acquired the rights to lidocaine. Torsten Gordh Sr. (1907–2010) commented (personal communication): “Before lidocaine, Astra was a small local drug firm. Lidocaine was the start of ASTRA as a world wide company.” The initial animal experiments performed by Leonard Goldberg, and the clinical studies undertaken by Gordh in 1945, showed that lidocaine had a greater therapeutic index than procaine [17]. Gordh (personal communication) described his studies as follows:

“Several hundred intracutaneous and subcutaneous tests were carried out in volunteers, using different concentrations of lidocaine versus procaine, all in a double blind fashion. Then 400 clinical infiltration anesthetics were given with lidocaine without adrenaline, and another 405 with addition of adrenaline. Effects were also assessed in brachial plexus blocks, sacral blocks, and spinal anesthetics.”

For the next half century, lidocaine stood as the “gold standard” for safety, enjoying enormous popularity and providing a template for future local anesthetic synthesis. The full “lidocaine story” is told in “Xylocaine: a discovery, a drama, an industry” by Lundkvist and Sundling (1993), published on the 50th anniversary of the original synthesis of lidocaine.

A Late-Arriving Ester

Although chloroprocaine was a reversion to the ester linkage, it became popular because of, rather than despite, its inherent instability. Francis Foldes and co-workers demonstrated that plasma esterases degraded chloroprocaine four times faster than procaine, translating to a lethal dose twice that of procaine despite chloroprocaine’s greater potency [19]. Confirming this reduced potential for systemic toxicity, Ansbro et al. found that the incidence of toxic reactions with epidural administration of chloroprocaine was roughly one-twentieth that of lidocaine [20]. Chloroprocaine gained widespread use in obstetrics, where its rapid degradation virtually eliminated concerns about fetal exposure.

The Long-Acting, Lipid-Soluble Amides and Cardiotoxicity

The next major milestone in local anesthetic history was the development of bupivacaine, a longer-acting, lipid-soluble anesthetic released into clinical practice in Scandinavia in 1963. A similar agent, etidocaine, was released shortly thereafter but failed because it inhibited motor function more than sensory function (i.e., a patient might feel but not be able to move!). In laboratory studies, bupivacaine’s systemic toxicity appeared to be equivalent to that of tetracaine and four times greater than that of mepivacaine (paralleling their relative potency) [21].
