Chapter 107

Medical Errors and Patient Safety

Key Patient Safety Concepts and Definitions

An error is a defect in process that arises from a person, the environment in which he or she works, or, most commonly, an interaction between the two. In the field of patient safety, negative outcomes are termed adverse events. Because patients may experience adverse events as a result of their underlying illnesses, preventable adverse events are distinguished from unpreventable adverse events; the former are due to error, whereas the latter are not. Errors that do not result in patient harm are termed near misses and are more common than adverse events. Safety experts recognize that near misses are just as valuable as adverse events to study when trying to prevent future errors. Table 107.E1 illustrates the difference between these terms.

Errors in Complex Systems

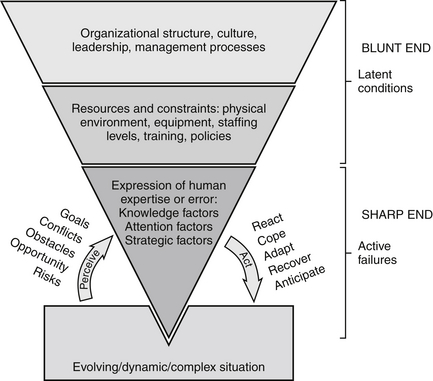

Errors in complex systems can be divided into two types based on where they occur in the system. Active failures occur at the sharp end of a complex system. The sharp end is so named because it contains the people and processes closest to the patient and the harm, which makes them the most easily identified when an adverse event occurs. Active failures always involve a human error, such as an act of omission (forgetting) or commission (misperceptions, slips, and mistakes). Human factors research has shown that the incidence of human error is predictable based on the nature of a task, the number of steps in a process, and the context in which these occur. Table 107.E2 provides examples of these types of errors. Although active failures are the easiest to identify, they represent only the tip of the iceberg and nearly always rest on deeper, more extensive latent conditions in the organization.

Latent conditions are defects in a system that make it error-prone. They arise from the physical environment and as unintended consequences of decisions made by organizational leaders, managers, and process designers. Latent conditions reside at the blunt end of a system, where they often go unrecognized while they “set people up” to fail at the sharp end. Indeed, investigations of most near misses and preventable adverse events identify multiple contributing latent conditions. For example, consider an investigation of a central line–associated bloodstream infection in the ICU. Several potential latent conditions for this infection are listed in Table 107.E3. Note that if this investigation had focused on active failures alone, it would have stopped short and blamed providers without identifying the underlying latent conditions that allowed the error or preventable infection to occur.

As Figure 107.1 illustrates, the expression of human error or expertise at the sharp end is governed on one side by the evolving demands of the situation being managed and on the other side by the organizational context in which an individual is operating. Organizational structures and culture at the blunt end of the system determine the resources and constraints that people at the sharp end experience. These resources and constraints powerfully influence how well people can use their knowledge, focus their attention, and make decisions during the course of their work.