Chapter 29 Error, Man and Machine

Much has been written recently about the lessons that medicine can take from aviation,1–3 but there is resistance to the idea of transferring ideas from other industries to healthcare. The usual reason given is that patients and their diseases are too complex to adapt to checklists and standard operating procedures. However, it is precisely because of this complexity that tools such as these from aviation can be life-saving. In acknowledgement of this, we already refer to algorithms such as those from the American Heart Association or the European Resuscitation Council (ERC) to guide best practice in resuscitating the patient.4,5

Human factors in aviation

Today, almost 80% of aircraft accidents are due to human performance failures, and the introduction of Human Factors considerations into the management of safety-critical systems has stemmed from the recognition of human error as the primary cause in a significant number of catastrophic events worldwide. In the late 1970s, a series of aircraft accidents occurred where, for the first time, the investigation established that there was nothing wrong with the aircraft and that the causal factors were poor decision-making and lack of situational awareness. The nuclear industry (Three Mile Island, Chernobyl) and the chemical industry (Piper Alpha, Bhopal) suffered similar accidents, where it became evident that the same issues, i.e. problem solving, prioritizing, decision-making, fatigue and reduced vigilance, were directly responsible for the disaster. It was clear, however, that the two-word verdict, ‘human error’, did little to provide insight into the reasons why people erred, or what the environmental and systems influences were that made the error inevitable. There followed a new approach to accident investigation that aimed to understand the previously underestimated influence of the cognitive and physiological state of the individuals involved, as well as the cultural and organizational environment in which the event occurred. The objective of ‘Human Factors’ in aviation, as elsewhere, is to increase performance and reduce error by understanding the personal, cognitive and organizational context in which we perform our tasks.

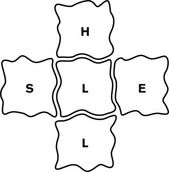

A commonly used diagram, which is useful in forming a basic understanding of the man/machine interface and human factors, is the SHEL model, first described by Edwards in 1972 and later refined by Hawkins in 1975 (Fig. 29.1). Each block represents one of the five components in the relationship, with liveware (human) always being in the central position. The blocks are, however, irregular in shape and must be carefully matched together in order to form a perfect fit. A mismatch highlights the potential for error.

• Liveware–Environment errors could be the product of extremely rushed procedures and items being missed. Long flights and excessive fatigue, or multiple, short busy flights, could also incur error as a direct product of the environment.

• Liveware–Software errors would naturally occur where information is delivered in a misleading or inaccurate manner, e.g. cluttered computer screens, or wrongly calculated weights and loads in published charts.

• Liveware–Hardware errors are caused by poor design. Nearly 60 years ago, aircraft gear levers were located behind the pilot’s seat, along with various other handles that were indistinguishable on a dark and stormy night. Mis-selection caused so many accidents that a mirror was installed on the forward panel, in an attempt to help the pilot see the levers! It was not until Boeing realized that the levers should be at the front and directly visible that these particular accidents ceased. The design was further modified by varying the shape of the individual levers, thus providing the pilot with additional tactile confirmation. An extra margin of safety had thereby been introduced into the critical function of gear selection.

• Liveware–Liveware errors arise as a direct by-product of the quality of the team relationship, interaction, leadership and information flow (see Shiva factor below).

Understanding error

Aircraft accidents are seldom due to one single catastrophic failure. Investigations invariably uncover a chain of events – a trail of errors, where each event was inexorably linked to the next and each event was a vital contributor to the outcome. The advantage in this truth is that early error detection and containment can prevent links from ever forming a chain. Errors can be defined as lapses, slips and misses, or errors of omission and errors of commission (see Appendix 1 for definition of these terms in the context of the study of error). Some errors occur as a direct result of fatigue, where maintaining vigilance becomes increasingly difficult. Conversely, others are a product of condensed time frames, where items are simply missed. Whatever its source, it must be recognized that error is forever present in both operational and non-operational life. High error rates tell a story; they are indicative of a system that either gives rise to, or fails to prevent, them. In other words, when errors are identified, it should be appreciated that they are the symptoms and not the disease.

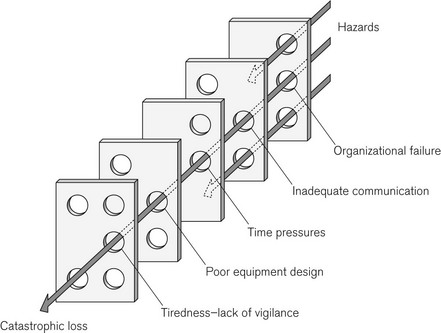

The ‘Swiss Cheese analogy’ is often used to explain how a system that is full of holes (ubiquitous errors, systems failures, etc.) can appear solid for most of the time. Thankfully, it is only rarely that the holes line up or cluster (e.g. adverse environmental circumstance + error + poor equipment design + fatigue) such that catastrophic failure occurs (Fig. 29.2). Error management aims to reduce the total number of holes, such that the likelihood of clustering by chance is reduced (see below).

Figure 29.2 The Swiss Cheese Model, originally by James Reason,6 demonstrates the multifactorial nature of a sample ‘accident’ and can be used to explain how latent conditions for an incident may lie dormant for a long time before combining with other failures (or, viewed differently, breaches in successive layers of defences) to lead to catastrophe. As an example, the organizational failure may be the expectation that an inadequately trained member of staff will perform a given task.
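The arithmetic behind the Swiss Cheese argument can be illustrated with a minimal sketch. It assumes, purely for illustration, that each defensive layer fails independently with a known probability; real layers are rarely independent, and the figures and the function name `breach_probability` are hypothetical rather than taken from Reason's work or from any accident data.

```python
from math import prod


def breach_probability(hole_probs):
    """Chance that every layer fails on the same occasion.

    Assumption (illustrative only): layers fail independently, so the
    joint probability is the product of the per-layer probabilities.
    """
    return prod(hole_probs)


# Hypothetical per-layer failure probabilities for four layers:
# adverse environment, individual error, poor equipment design, fatigue.
baseline = [0.1, 0.1, 0.1, 0.1]

# Error management halves the number of 'holes' in every layer.
improved = [p / 2 for p in baseline]

ratio = breach_probability(baseline) / breach_probability(improved)
print(f"baseline: {breach_probability(baseline):.2e}")
print(f"improved: {breach_probability(improved):.2e}")
print(f"reduction factor: {ratio:.0f}")
```

Under these assumptions, halving the holes in each of four layers reduces the chance of all four lining up sixteen-fold, which is why modest improvements spread across several layers can matter more than a large improvement in a single one.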

Root causes of adverse events

Once the catalyst event has occurred and the system faults have contributed to, rather than mitigated, the error, the patient or passengers must rely on the human beings within the system to prevent harm. The greatest tool in aviation and in medicine is situational awareness: the ability to keep the whole picture in focus, and not to get so lost in detail that one forgets to fly the plane or to prioritize patient ventilation over achieving tracheal intubation.7

An example from aviation

The Captain was under pressure from the airline dispatcher to return the plane to the USA, so he continued, sometimes exerting as much as 150 pounds of force on the jackscrew assembly in order to maintain control of the aircraft. What the crew did not know was that when the MD 80 was designed, dissimilar metals were used in the construction of the jackscrew assembly. Because this assembly experienced heavy flight loads, the softer metal wore at a greater rate than the harder metal, and so the assembly became loose (Catalyst event).